Maissam Barkeshli

@MBarkeshli

Visiting Researcher @ Meta FAIR. Professor of Physics @ University of Maryland & Joint Quantum Institute. Previously @ Berkeley,MIT,Stanford,Microsoft Station Q

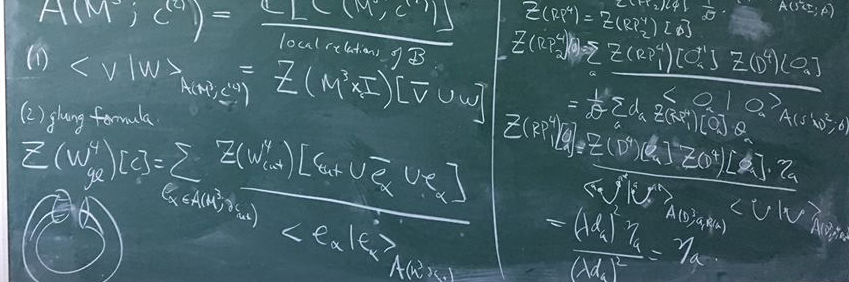

An absolutely incredible, highly interconnected web of ideas connecting some of the most important discoveries of late twentieth century physics and mathematics. This is an extremely abridged, biased history (1970-2010) with many truly ground-breaking works still not mentioned:

well said @DimaKrotov

Nice article! I appreciate that it mentions my work and the work of my students. I want to add to it. It is true that there is some inspiration from spin glasses, but Hopfield is much bigger than spin glasses. The key ideas that resurrected artificial neural networks in 1982…

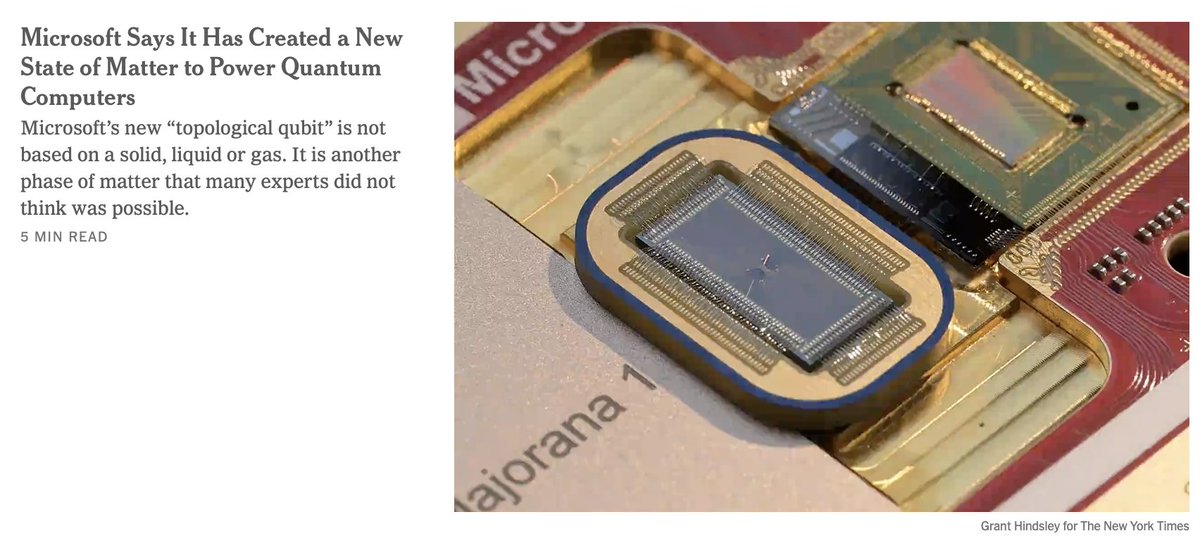

This is a bizarre headline from the @nytimes , given that the @Nature paper published today does not make this bold claim at all. If a topological qubit were ever actually demonstrated, it would be a pinnacle of human achievement. But today is apparently not the day.

🤖 Transformers can write poetry, code, and generate stunning art, but can they predict seemingly random numbers? We show that they learn to predict simple PRNGs (LCGs) by figuring out prime factorization on their own!🤯 Find Darshil tomorrow, 11am at #ICML2025 poster session!

🚨 ICML 2025 🚨 I'll be at @icmlconf Mon-Fri. DM if you'd like to chat!☕️ Also come check out our poster on cracking Pseudo-Random Number Generators with Transformers! 🕚 Tuesday @ 11am #⃣ E-1206 🔗arxiv.org/abs/2502.10390

New paper! Collaboration with @TianyuHe_ and Aditya Cowsik. Thread.🧵

Excited to share our paper "Universal Sharpness Dynamics..." is accepted to #ICLR2025! Neural net training exhibits rich curvature (sharpness) dynamics (sharpness reduction, progressive sharpening, Edge of Stability)- but why?🤔 We show that a minimal model captures it all! 1/n

Chetan Nayak overviews unpublished results by @MSFTResearch on Majorana qubits.

Adam is one of the most popular optimization algorithms in deep learning but it is very memory intensive. We did some analysis to understand how much of the second moment information can be compressed, leading to SlimAdam. Thanks @dayal_kalra @jwkirchenbauer @tomgoldsteincs

Low-memory optimizers sometimes match Adam but aren't as reliable, making practitioners reluctant to use them. We examine when Adam's second moments can be compressed during training. We also introduce SlimAdam, which compresses moments when feasible & preserves when detrimental

Where would we as a society be without math? It is a travesty that our entire public investment in math is ONLY $250M (0.004% of federal budget) given ROI is incredible. Examples: 1) elliptic curves -> cryptography 2) error correcting codes -> communication 3) information…

The US National Science Foundation spends about $250 million (yes, that’s “million” with an “m”) funding math research (pure and applied) per year. ROI is actually incredible.

Overall, I believe it is imperative to pursue a new unified Science of Intelligence that can help us understand and improve both biological and artificial systems alike. It must be done out in the open and will depend crucially on public investment in fundamental science. Such…

I'll be at #NeurIPS2024 this week, presenting our work tomorrow on the mechanisms of warmup! openreview.net/forum?id=NVl4S… 📍 West Ballroom A-D (#5907) 📅 Wed, Dec 11 ⏰ 4:30 PM - 7:30 PM PST Looking forward to engaging discussions!