Paul Linton

@LintonVision

3D Visual Experience / PI + NOMIS Fellow @ItalianAcademy / Presidential Scholar @columbiacss / Affiliate @KriegeskorteLab + @ZuckermanBrain

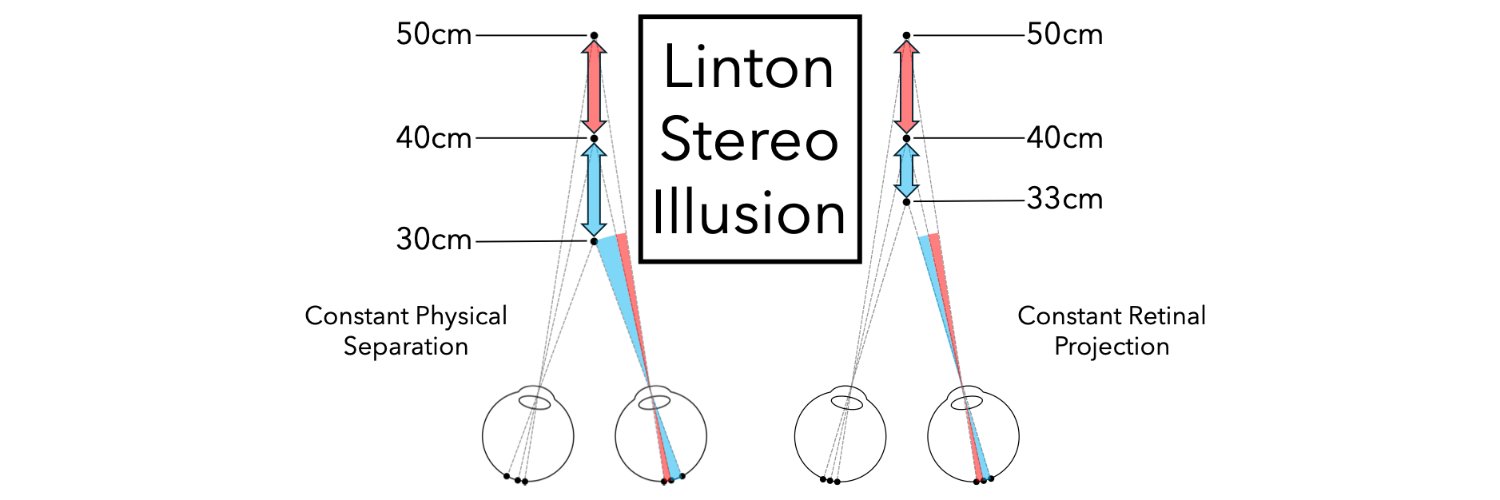

VSS Demo Night!! “FIVE ILLUSIONS CHALLENGE OUR UNDERSTANDING OF VISUAL EXPERIENCE” #VSS2025 @VSSMtg Thread below 🧵👇

Here's a third application of our new world modeling technology - to object grouping. In a sense this completes the video scene understanding trifecta of 3D shape, motion, and now object individualization. From a technical perspective, the core innovation is the idea of…

AI models segment scenes based on how things appear, but babies segment based on what moves together. We utilize a visual world model that our lab has been developing, to capture this concept — and what's cool is that it beats SOTA models on zero-shot segmentation and physical…

Over the past 18 months my lab has been developing a new approach to visual world modeling. There will be a magnum opus that ties it all together out in the next couple of weeks. But for now there are some individual application papers that have poked out.

📷 New Preprint: SOTA optical flow extraction from pre-trained generative video models! While it seems intuitive that video models grasp optical flow, extracting that understanding has proven surprisingly elusive.

"What does primary visual cortex (V1) do?" -- youtu.be/y0HFXKK72dg -- by the Student Network for Neuroscience. Half a century ago, Hubel & Wiesel discovered how V1 maps visual inputs to neural responses, this video addresses what V1 neural activities do for behavior. It is…

I am preparing for, and looking forward to speaking on, "What does primary visual cortex (V1) do?" tomorrow (Thursday) at 6 pm at this student-organized event. Event link here: forms.gle/dGZ5XJ7GHGHJGp…

This 3-minute video youtube.com/watch?v=xIHY9z… gives a quick introduction to Zhaoping, L. (2025). Testing the top-down feedback in the central visual field using the reversed depth illusion. iScience, Volume 28, Issue 4, 112223, cell.com/iscience/fullt…

A great program for the Systems Vision Science symposium, Aug. 20-22 (shorturl.at/S3dxs). You are welcome to participate by registering until we reach capacity (100 people). We have: Tadashi Isa, David Brainard, Peter Dayan, Sheng He, Jonathan Victor, Jan Koenderink, Andrea von Doorn,…

The illusion of the year contest in the New York Times!!! nytimes.com/2025/06/26/sci… Vote now: vote.illusionoftheyear.com

We proudly present the static spin illusion, a finalist of the Best Illusion of The Year Contest. You perceive a rotation? There is none! Saleeta Qadir, a student at @UniFAU, built this illusion using computer vision and image processing. Please vote for her: vote.illusionoftheyear.com

How do object representations emerge in the developing brain? Our GAC workshop at #CCN2025 brings together behavior, neuroimaging & modeling to test competing hypotheses. Join the conversation: sites.google.com/ccneuro.org/ga… #VisionScience #DevNeuro #NeuralNets

👁️ Synchronization test #1: Video+LIDAR integration in #Miraflores, Lima, Peru 🇵🇪. Object Detection+Classification, Surface Normal Estimation, Depth Estimation & 3D Point Cloud. This clip shows an integration of our tech stack with @MultiPacha from our first mapping session…

Honored to have been featured on the front page of @EPFL_en's website! Thank you EPFL for the fantastic resources that made this work possible.

👁️ 🤖 A study from our school reveals why humans excel at recognizing objects from fragments while AI struggles, highlighting the critical role of contour integration in human vision. go.epfl.ch/ZEB-en

For seven years I’ve worked with an incredible team creating neurotech for human-computer input that ‘just works’ through noninvasive muscle recordings (sEMG) from a wristband. Our @Nature paper now publicly shares the significant advances we’ve made.

We’re thrilled to see our advanced ML models and EMG hardware — that transform neural signals controlling muscles at the wrist into commands that seamlessly drive computer interactions — appearing in the latest edition of @Nature. Read the story: nature.com/articles/s4158… Find…

This is a great opportunity. I am collaborating with Danyal on work closely related to this and he is full of great ideas.

I'm hiring for an @ARIA_Research-funded position at @imperialcollege @ImperialEEE. Broad in scope + flexible on topics! Exciting projects at the intersection of neural networks & new AI accelerators, from a HW/SW co-design perspective. Join us 🚀: imperial.ac.uk/jobs/search-jo…

👁️🔬 Excited to welcome Austin Roorda as the 2025 Boynton Lecturer at Optica FVM! A pioneer in adaptive optics and retinal imaging, his work has reshaped how we see the eye—and how the eye sees. 🎤 Join us Oct 2–5 at UMN 🔗 opticafallvisionmeeting.org

Announcing the new "Sensorimotor AI" Journal Club — please share/repost! w/ Kaylene Stocking, @TommSalvatori, and @EliSennesh Details + sign up link in the first reply 👇

"Perception as Inference" is a century-old idea that has inspired all major theories in neuroscience 🧠 — including: ✅ Sparse Coding ✅ Predictive Coding ✅ Free Energy Principle & more! In my new blog post, I build the intuition behind this idea from ground up 👉[1/6]🧵

There is compelling evidence from both human cognition and now emerging AI models that 3D generation is drastically more data-efficient than other modalities. By adulthood, humans have passively observed at least 50 million images through their senses (approximately 166,000…

#ICML #cognition #GrowAI We spent 2 years carefully curating every single experiment (e.g. object permanence, A-not-B task, visual cliff task) in this dataset (total: 1503 classic experiments spanning 12 core cognitive concepts). We spent another year to get 230 MLLMs evaluated…

Our paper on learning controllable 3D robot models from vision is published in Nature! Huge congrats to Lester and the team, @annan__zhang, @BoyuanChen0, Hanna Matusik, Chao Liu, and Daniela Rus!! Learning joint world models for the environment & the agent is super exciting :)

Now in Nature! 🚀 Our method learns a controllable 3D model of any robot from vision, enabling single-camera closed-loop control at test time! This includes robots previously uncontrollable, soft, and bio-inspired, potentially lowering the barrier of entry to automation! Paper:…

Here is the call for papers for a special issue on Infant Consciousness that I am co-editing with Sascha Fink and @joel_frohlich, to be published in the journal Philosophy and the Mind Sciences. Submissions are due by October 15th, 2025. @PhiMiSci philosophymindscience.org/index.php/phim…

For some reason these papers were just archived on PhilPapers, so I am sharing a link to the volume here. An excellent collection of essays and commentaries (incl. one by yours truly) on recent work in the philosophy of perception. link.springer.com/book/10.1007/9…