Justine Tunney

@JustineTunney

I built a C library that lets you compile 12kb static binaries that run natively on Linux, Mac, Windows, FreeBSD, OpenBSD, NetBSD and BIOS using just GCC/Clang.

That's how you know they're not crooked. Meanwhile, here in the SF Bay Area, I've seen elected officials get paid 4 figure salaries to manage 9 figure budgets. x.com/kingofthecoast…

Top pay in the Singaporean government is kind of crazy. Base salary tied to the top 0.02% of earners, and bonuses tied to macroeconomic aggregates.

I just learned that the @llamafile project I did for Mozilla Builders has been adopted by 32% of organizations. That’s more popular in the cloud than Anthropic! It goes to show that local AI with fast CPU prefill and RHEL5 support is a winning formula. wiz.io/reports/the-st…

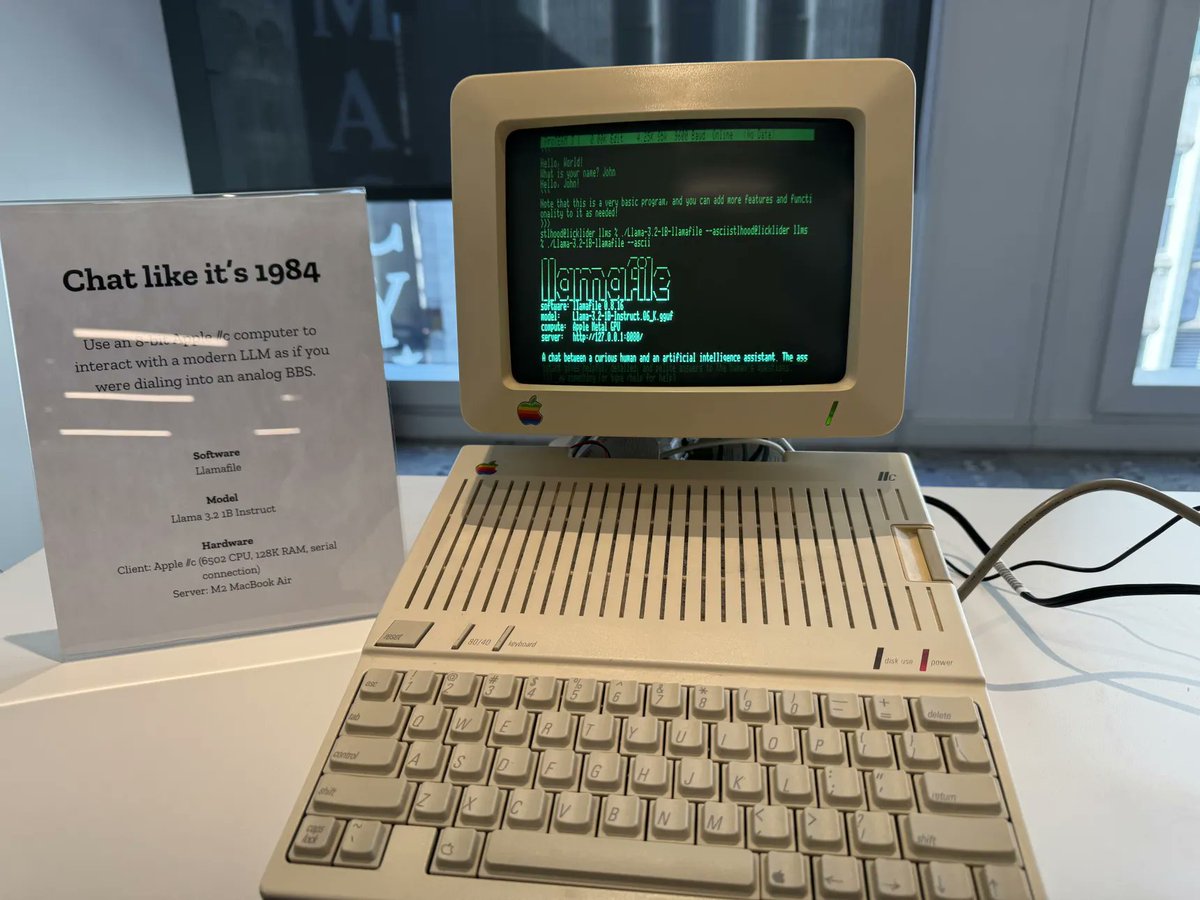

Just got back from Mozilla Builders Demo Day. Here's some photos of the @llamafile booth as well as slides from an @openwebui talk via @MattBCool.

That's saying a lot for C++ code. x.com/blu_blood_hero…

Wut? I'm not C++ dev and I can read the code without trouble 👀🤔

I built a new JSON library. It runs fast, builds fast, is easy to modify, fully passes JSONTestSuite, and is fuzzed by AFL. github.com/jart/json.cpp/

I wrote a blog post about all the weird programming language syntax I encountered while building a syntax highlighter for @llamafile. Read justine.lol/lex/

Mozilla's new blog post w/ executive summary on some key progress we've made on @llamafile the past several months. hacks.mozilla.org/2024/10/llamaf…

Llamafile 0.8.14 is out, featuring a brand-new command line chat interface github.com/Mozilla-Ocho/l…

I built a new chatbot interface for llamafile, based on bestline (h/t @antirez). Try it out with LLaMA 3.2. You need 8GB+ of RAM and a Linux/Windows/Mac PC. huggingface.co/Mozilla/Llama-…

I wrote a new blog post about my work on mutexes, and it's a great conversation starter for any engineer. justine.lol/mutex/

Mozilla just kicked off their Builders Accelerator where they've sponsored 14 local AI projects like @OpenWebUI blog.mozilla.org/en/mozilla/14-…

You can now use 1.58 bit LLMs with llamafile. I just uploaded TriLM. Its weights are -1 / 0 / +1 just like the Soviet Setun. It comes in 8 different sizes (78MB to 1.3GB) and has good benchmark scores on four CPUs. huggingface.co/Mozilla/TriLM-…

I just launched whisperfile which is the easiest way to turn audio into text. You download one file that embeds OpenAI's Whisper model and runs 100% locally. It'll even translate non-English into English while it's transcribing. huggingface.co/Mozilla/whispe…

I've just released llamafile 0.8.13 which has support for the latest models, quality improvements, and new commands to try like whisperfile (speech to text / translation) and sdfile (image generation). I've also tripled the performance of my new http server for embeddings.…

I've uploaded fresh new llamafiles for Gemma 2B Instruct. This model was released by Google a few weeks ago. I've been having a lot of fun talking to this model using the llamafile web GUI about Google. It's very snappy, even on CPU, which is part thanks to my new high-quality…

I just wrote a change making GeLU 2x to 8x faster on CPU in llama.cpp and llamafile, with 8192x better accuracy. It's the world's most popular activation function. Now it has clean self-contained SSE2, AVX2, AVX512, ARM NEON impls you can copy and paste. github.com/ggerganov/llam…

I just wrote a change adding native support for Actually Portable Executable to the FreeBSD Kernel. Thanks to kernelspace loading, fat binaries will be able to load in microseconds without needing to go through /bin/sh. github.com/freebsd/freebs…

Are you building an open source AI project like llamafile? Mozilla has $100k of non-dilutive funding burning a hole in their pocket, waiting for you. I can attest that they're a good friend to have on your side. Learn more and apply: future.mozilla.org/builders/

I've just uploaded llamafiles for Google's new Gemma2 language model. This is the 27B model that folks have been saying is better than 70B and 104B models like LLaMA3 and Command-R+. People are even saying it's better than the original GPT4! Now you can run it air-gapped on your…

I've just uploaded llamafiles for Facebook's new LLM Compiler model. It's able to optimize assembly, LLVM IR, and compile C. huggingface.co/Mozilla/llm-co… This model is sensitive. You must follow the prompt format exactly. It has a strong affinity for the way clang -S output looks.…