Jaap Jumelet

@JumeletJ

Post-doc with @AriannaBisazza in Groningen. PhD with Jelle Zuidema at the ILLC, UvA. I investigate linguistic questions using current NLP methods.

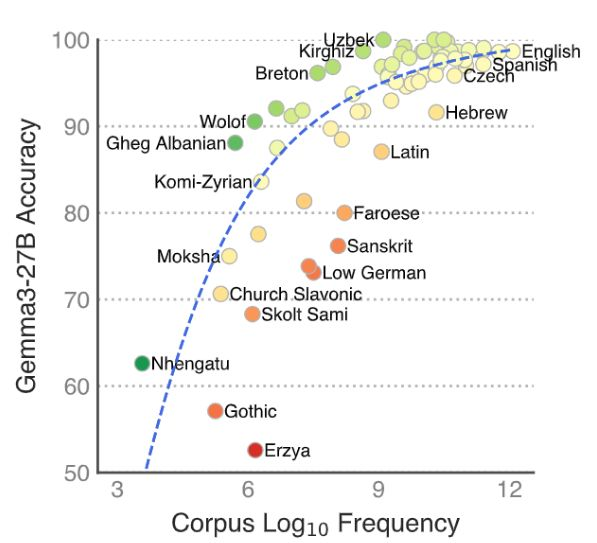

✨New paper ✨ Introducing 🌍MultiBLiMP 1.0: A Massively Multilingual Benchmark of Minimal Pairs for Subject-Verb Agreement, covering 101 languages! We present over 125,000 minimal pairs and evaluate 17 LLMs, finding that support is still lacking for many languages. 🧵⬇️

Happy to introduce TurBLiMP, the 1st benchmark of minimal pairs for free-order, morphologically rich Turkish! Pre-print: arxiv.org/abs/2506.13487 Fruit of an almost year-long project by amazing MS student Ezgi Basar, in collab w/ @FrancescaPado15 @JumeletJ

Close your books, test time! The evaluation pipelines are out, baselines are released and the challenge is on. There is still time to join and we are excited to learn from you on pretraining and the gaps between humans and models. *Don't forget to fast-eval on checkpoints

🙇‍♂️ NEW PAPER @ NAACL2025 🔆 What type of learning is in-context learning? Is ICL a kind of gradient descent? Answer: the phenomenon of syntactic priming from psycholinguistics tells us that ICL behaves like error-driven learning! Paper: aclanthology.org/2025.naacl-lon… 🧵1/n

✈️ Headed to @iclr_conf — whether you’ll be there in person or tuning in remotely, I’d love to connect! We’ll be presenting our paper on pre-training stability in language models and the PolyPythias 🧵 🔗 ArXiv: arxiv.org/abs/2503.09543 🤗 PolyPythias: huggingface.co/collections/El…

✨New pre-print✨ Crosslingual transfer allows models to leverage their representations for one language to improve performance on another language. We characterize the acquisition of shared representations in order to better understand how and when crosslingual transfer happens.

Interested in a paid PhD position in the Netherlands? Join me, Annemarie van Dooren, and @AriannaBisazza on a project to develop computational models of modal verb acquisition. A job ad will be out soon, but here's a preview: yevgen.web.rug.nl/phd.pdf

We are expecting 🫄 A 3rd BabyLM 👶, as a workshop @emnlpmeeting. Kept: all. New: Interaction (education, agentic) track; workshop papers. More in 🧵

These grammatical concepts are real because they compress the data in a way that allows understanding and causal manipulation, not because they correspond 1:1 to some hardwired mental/neural representation.

🚀 Curious how to design, build, deploy, and scale a modern AI chat app with full control over the language model? Check out my new 2-part blog series + code! Part 1: Build microservices locally towardsdatascience.com/designing-buil… Part 2: Deploy to Azure towardsdatascience.com/designing-buil… 🧵👇 1/4

This is the most gut-wrenching blog I've read, because it's so real and so close to heart. The author is no longer with us. I'm in tears. AI is not supposed to be 200B weights of stress and pain. It used to be a place of coffee-infused eureka moments, of exciting late-night arxiv…

Together with amazing collaborators, we released a series of short educational videos on YouTube to answer 6 key questions about LLM Interpretability (🧵) Hope this is useful to people!

BlackboxNLP has started with our first keynote by Jack Merullo! Come join us in the Jasmine room to learn about his talk on "Simple Mechanisms Underlying Complex Behaviors in Language Models".