jianlin.su

@Jianlin_S

Grad is all you need @Kimi_Moonshot Blog: https://jianlin.su , Cool Papers: https://papers.cool

kexue.fm/archives/11006 introduces the idea of using matrices and their msign to perform general operations on the singular values, including singular value clipping, step functions, and arbitrary polynomials (not just odd polynomials). @leloykun @YouJiacheng @_arohan_
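
As a rough illustration of doing singular value operations with msign alone, here is a minimal NumPy sketch of singular value clipping. It uses the scalar identity min(s, 1) = (s + 1 - |1 - s|) / 2 and two nested msign calls. This is only one straightforward construction and is not claimed to be the one in the linked article. The helper names (`msign`, `mclip_svd`, `mclip_via_msign`) are hypothetical, and msign is computed by SVD purely for reference.

```python
import numpy as np

def msign(m):
    """Reference msign: U @ V^T for the thin SVD M = U S V^T."""
    u, _, vt = np.linalg.svd(m, full_matrices=False)
    return u @ vt

def mclip_svd(m):
    """Ground truth: clip the singular values of M to at most 1."""
    u, s, vt = np.linalg.svd(m, full_matrices=False)
    return (u * np.minimum(s, 1.0)) @ vt

def mclip_via_msign(m):
    """Clip singular values using only matmuls and msign (assumes full rank)."""
    o = msign(m)                   # U V^T
    a = o - m                      # U (I - S) V^T
    abs_part = msign(a) @ a.T @ o  # U |I - S| V^T
    return 0.5 * (m + o - abs_part)

rng = np.random.default_rng(0)
m = rng.standard_normal((4, 3))
clip_err = np.abs(mclip_via_msign(m) - mclip_svd(m)).max()
clipped_singular_values = np.linalg.svd(mclip_via_msign(m), compute_uv=False)
```

Here `clip_err` should be at floating-point noise level for a generic full-rank input, and no entry of `clipped_singular_values` should exceed 1 beyond rounding.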

kexue.fm/archives/11175 Extends the previous article to calculate any G P^{-s/r}.

kexue.fm/archives/11158 A neat method for computing P^{1/2}, P^{-1/2} and GP^{-1/2}, reusing the coefficients of msign.
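
To make the link between matrix square roots and msign-style iterations concrete, here is a minimal sketch of the textbook coupled Newton-Schulz iteration for a symmetric positive definite P. On each eigenvalue it applies the same cubic x -> 1.5x - 0.5x^3 as the classical msign iteration. It is an assumption that this resembles the article's approach; the tuned coefficients from the article are not reproduced here, and the function name `sqrt_and_invsqrt` is hypothetical.

```python
import numpy as np

def sqrt_and_invsqrt(p, steps=30):
    """Return approximations of (P^{1/2}, P^{-1/2}) for symmetric positive definite P."""
    n = p.shape[0]
    c = np.linalg.norm(p)  # Frobenius norm, so eigenvalues of P / c lie in (0, 1]
    y = p / c              # converges to (P / c)^{1/2}
    z = np.eye(n)          # converges to (P / c)^{-1/2}
    for _ in range(steps):
        t = 0.5 * (3.0 * np.eye(n) - z @ y)
        y = y @ t
        z = t @ z
    return np.sqrt(c) * y, z / np.sqrt(c)

rng = np.random.default_rng(0)
b = rng.standard_normal((4, 4))
p = b @ b.T + np.eye(4)              # well-conditioned SPD test matrix
g = rng.standard_normal((3, 4))

p_sqrt, p_invsqrt = sqrt_and_invsqrt(p)
g_p_invsqrt = g @ p_invsqrt          # G P^{-1/2}

sqrt_err = np.abs(p_sqrt @ p_sqrt - p).max()
invsqrt_err = np.abs(p_invsqrt @ p @ p_invsqrt - np.eye(4)).max()
```

The iteration uses only matrix multiplications, which is the property that makes this family of methods attractive on accelerators.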

🚀 Hello, Kimi K2! Open-Source Agentic Model! 🔹 1T total / 32B active MoE model 🔹 SOTA on SWE Bench Verified, Tau2 & AceBench among open models 🔹 Strong in coding and agentic tasks 🐤 Multimodal & thought-mode not supported for now With Kimi K2, advanced agentic intelligence…

Discusses efficient inversion of matrices of the form (Λ + QK.T * M), which commonly arise in modern linear attention mechanisms. kexue.fm/archives/11072

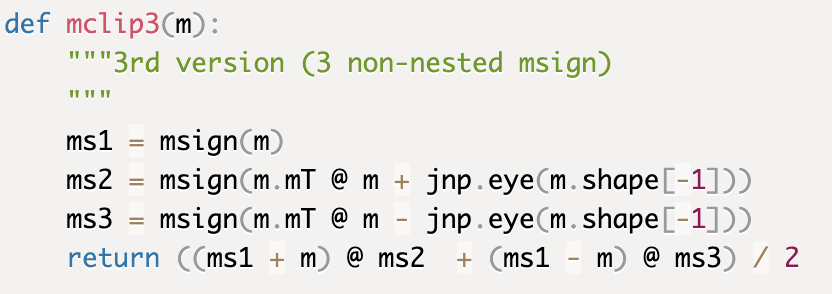

The latest method for calculating mclip (3 non-nested msign, very low error): kexue.fm/archives/11059 @leloykun @YouJiacheng @_arohan_

The Linear Attention Odyssey: From Imitation to Innovation and Back kexue.fm/archives/11033

kexue.fm/archives/11025 Discusses how to compute the derivative of the msign operator. If you are interested in combining “TTT + Muon”, as in arxiv.org/abs/2505.23884 , this might be helpful to you.

kexue.fm/archives/10996 The latest progress in finding better Newton-Schulz iterations for the msign operator. It directly derives the theoretically optimal solution through the equioscillation theorem and a greedy transformation. Original paper: papers.cool/arxiv/2505.169…
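
For readers unfamiliar with the baseline being improved, here is a minimal sketch of the classical cubic Newton-Schulz iteration for msign, X <- 1.5 X - 0.5 X X^T X, checked against an SVD reference. The coefficients (1.5, -0.5) are the textbook ones, not the optimized ones derived in the article, and the function names are hypothetical.

```python
import numpy as np

def msign_svd(m):
    """Reference msign via SVD: U @ V^T for M = U S V^T."""
    u, _, vt = np.linalg.svd(m, full_matrices=False)
    return u @ vt

def msign_newton_schulz(m, steps=40):
    """Classical cubic Newton-Schulz iteration (assumes M has full rank)."""
    x = m / np.linalg.norm(m)  # Frobenius scaling puts all singular values in (0, 1]
    for _ in range(steps):
        # Each singular value s is mapped to 1.5 s - 0.5 s^3, which drives it toward 1.
        x = 1.5 * x - 0.5 * x @ x.T @ x
    return x

rng = np.random.default_rng(0)
m = rng.standard_normal((4, 3))
ns_err = np.abs(msign_newton_schulz(m) - msign_svd(m)).max()
```

Small singular values grow by only about 1.5x per step at first, which is why the fixed step count is generous here; finding better per-step polynomials is the problem the post refers to.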

kexue.fm/archives/10972 This article introduces the Equioscillation Theorem for polynomial best approximation, as well as the problem of differentiation of the infinity norm related to it.

kexue.fm/archives/10958 This article centers on the recently released MeanFlow and discusses the acceleration of diffusion model generation from the perspective of “average velocity.”

kexue.fm/archives/10945 Shared Expert and Fine-Grained Expert in MoE.

Exploring the Magic Behind MLA: Why Is It So Effective? kexue.fm/archives/10907 - Larger head_dims = better performance? - Partial RoPE = secret sauce? - KV-Shared = added boost? MLA's success may lie in its unique combination of these elements. 🚀 #MLA