Hao Sun - RL @ACL 🇦🇹

@HolarisSun

4th year PhD Student at @Cambridge_Uni. IRL x LLMs. Superhuman Intelligence needs RL, and LLMs help human to learn from machine intelligence.

I have been working on Reward Modeling (and Inverse RL) for LLMs for the past 1.5 years. We built reward models (RMs) for prompting, dense RMs to improve credit assignment, and RMs from the SFT data However, many questions remained unclear to me until this paper was finished.🧵

🚀 RL is powering breakthroughs in LLM alignment, reasoning, and agentic apps. Are you ready to dive into the RL x LLM frontier? Join us at @aclmeeting ACL’25 tutorial: Inverse RL Meets LLM Alignment this Sunday at Vienna🇦🇹(Jul 27th, 9am) 📄 Preprint at huggingface.co/papers/2507.13…

This is SCIENCE🚀!!!

Our new ICML 2025 oral paper proposes a new unified theory of both Double Descent and Grokking, revealing that both of these deep learning phenomena can be understood as being caused by prime numbers in the network parameters 🤯🤯 🧵[1/8]

Now with Qwen’s RL-fine-tuning results, are we witnessing a quiet return of prompt optimization/engineering? Now we have a 2-player game: users become “lazy prompters”, but the system prompts (e.g. thinking patterns) need to be highly optimized. Next: Bi-level optimization?

"Knowledge belongs to humanity, and is the torch which illuminates the world." — Louis Pasteur Especially for those contributed by the community.

AI cannot feel time, then how can it really understand humans?

📢New Paper on Process Reward Modelling 📢 Ever wondered about the pathologies of existing PRMs and how they could be remedied? In our latest paper, we investigate this through the lens of Information theory! #icml2025 Here’s a 🧵on how it works 👇 arxiv.org/abs/2411.11984

Happy to share that our paper on "Active Reward Modeling" has been accepted to ICML 2025! #ICML2025 The part I like the most about the project is its simplicity! Huge thanks to my amazing co-authors @ShenRaphael @HolarisSun More to come! For more detailed 🧵 see 👇

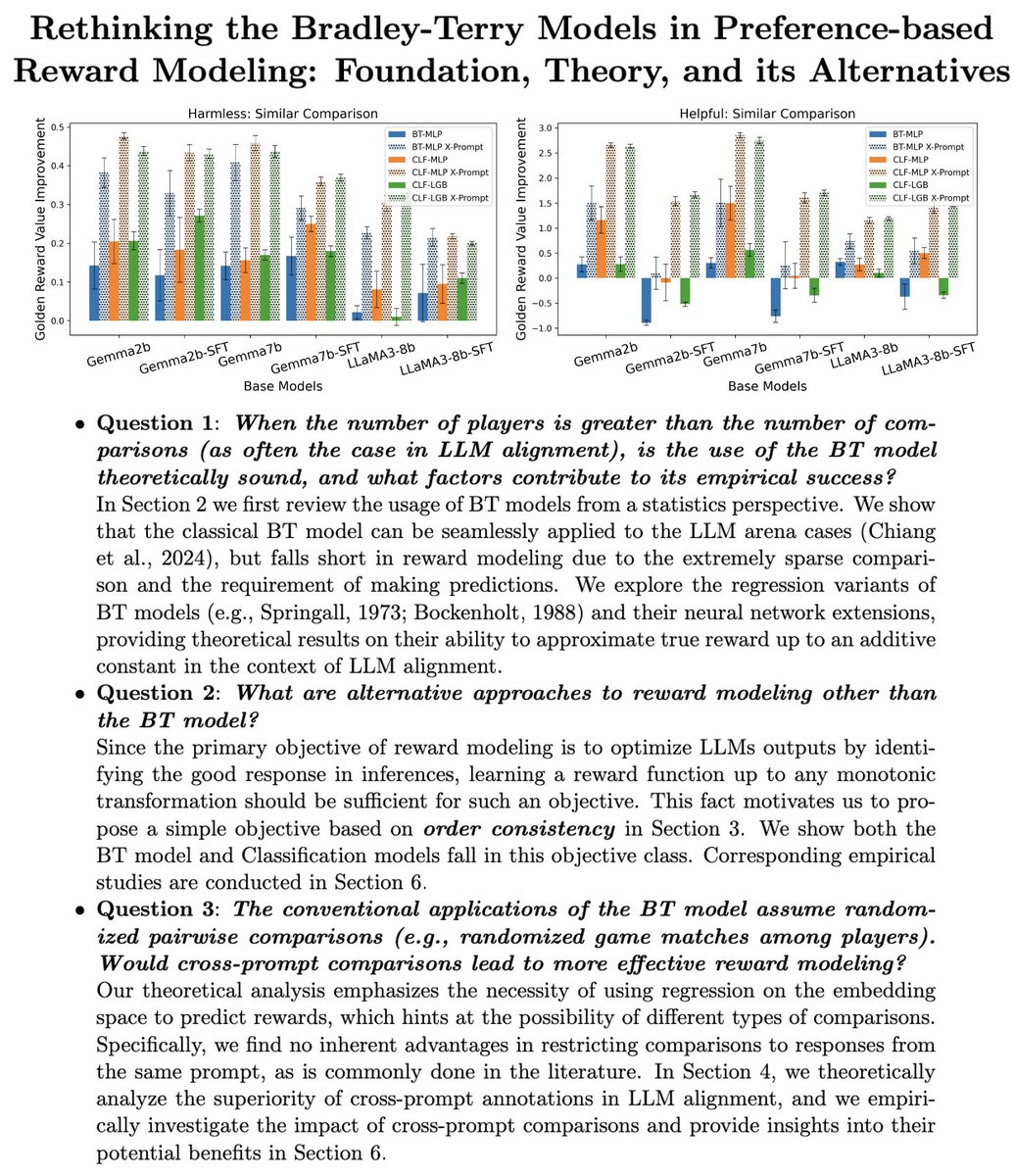

📢New Paper on Reward Modelling📢 Ever wondered how to choose the best comparisons when building a preference dataset for LLMs? Our latest paper revives classic statistical methods to do it optimally! Here’s a 🧵on how it works 👇 arxiv.org/abs/2502.04354

OpenReview Justice!

I'm honestly a bit surprised but whatever! Worth celebrating arxiv.org/abs/2502.04354 Here is our arxived paper. With @HolarisSun and @jeanfrancois287 .

Glad to be there with @HolarisSun presenting our work openreview.net/forum?id=rfdbl…

Heading to 🇸🇬ICLR next week! Can’t wait to catch up with old friends and meet new ones — let’s chat about RL, reward models, alignment, reasoning, and agents! Also, fun fact🤓: Yunyi won’t be there physically, but his digital twin will be attending instead. Stay tuned!

ICLR wrapped! Eggie and Toastie said it was the BEST🥰

The oral sessions and poster sessions are happening at the same time, so it actually feels like the oral speakers are just talking to each other🤣

@HolarisSun is famous now!

Heading to 🇸🇬ICLR next week! Can’t wait to catch up with old friends and meet new ones — let’s chat about RL, reward models, alignment, reasoning, and agents! Also, fun fact🤓: Yunyi won’t be there physically, but his digital twin will be attending instead. Stay tuned!