Mark Histed-🧠 Lab

@HistedLab

How brain neural nets do computations to process info. Bearish on AI taking over the world, bullish on neuro advances via understanding AI. Pers. views.

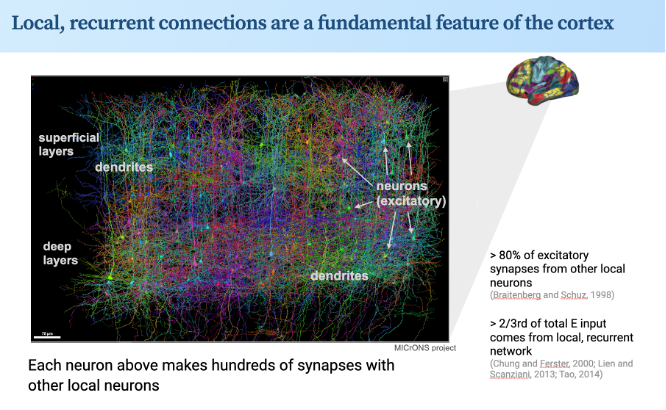

New preprint on 'sequence filtering', led by @CianaDeveau and Z Zhou. We see this as a key step forward in understanding how cortex works. All cortical areas have dense excitatory-excitatory (exc-exc) recurrent connectivity. What do these connections do, especially in sensory cortex? Our data say: they do dynamics/time. 1/4

noooooo

Wasn't paid. I helped him find pants & shoes for free because I appreciate that he donated to charity. But I think he looks good. The main thing I wanted to avoid was the slim-fit, calf-gripping chino that has defined so many aging Millennials who pair them with dress shirts and white sneakers.

Every time I see the word “delve” now, I cringe. For now, human brains are still better at learning the statistical structure of the world.

New paper that looked at 15 million biomedical abstracts and found specific words that abruptly increased in frequency in 2024, likely due to authors using LLMs. Take-home message: don't use the word "delve" in science. science.org/doi/10.1126/sc…

Here’s the extended video from CBS today. Many thanks to Dr. Rosenberg and the patients in this video like the Callahans and Natalie Phelps. It’s not easy to speak out. Best of luck to them in their fight against cancer. youtube.com/watch?app=desk…

Seems especially bad given that there is an ongoing destruction of cancer research in America. Sorry for your friend @AnnieLowrey, and all the best to her.

Right. This is what I mean when I say AI has no notion of truth (or what’s right). It’s pure statistics of language tweaked with slight statistical feedback about right answers. That seems fundamentally different from how human brains work.

Reproduced after creating a fresh ChatGPT account. (I wanted logs, so I didn't use a temporary chat.) Alignment-by-default is falsified: ChatGPT's knowledge and verbal behavior about right actions is not hooked up to its decision-making. It knows, but doesn't care.

This doesn’t seem to me to invalidate the reasoning approach. Yes, the reasoning models apply computations similar to those of the prior models, but in steps. Did we think something different was happening?

BREAKING: Apple just proved AI "reasoning" models like Claude, DeepSeek-R1, and o3-mini don't actually reason at all. They just memorize patterns really well. Here's what Apple discovered: (hint: we're not as close to AGI as the hype suggests)

The first letter of the genetic code was cracked #OTD in 1961 at 3am by Marshall Nirenberg (right) and Heinrich Matthaei (left) at the @NIH. Nirenberg shared the 1968 medicine prize with Har Gobind Khorana and Robert Holley for their work on the genetic code. #NobelPrize