ℏεsam

@Hesamation

ai engineer | rigorously overfitting on a learning curve

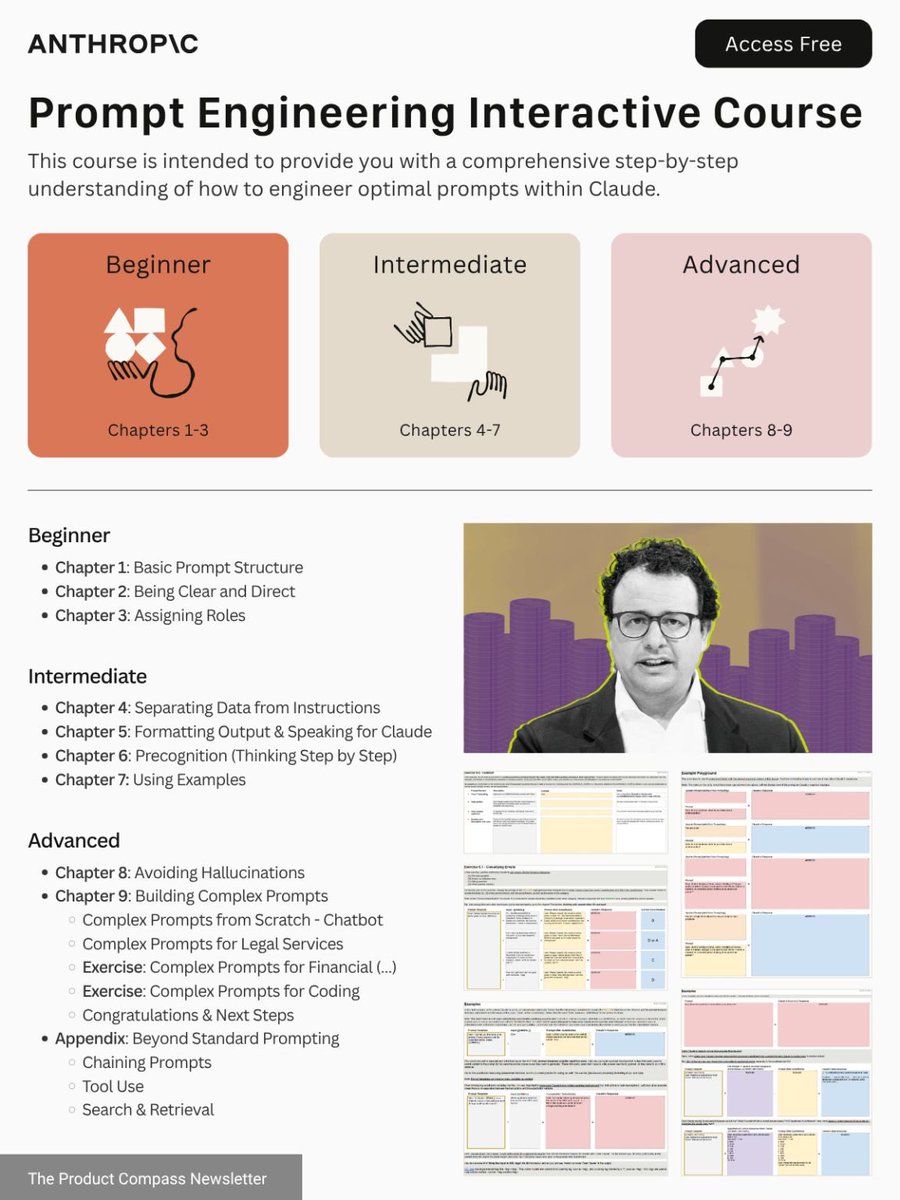

Anthropic released a free interactive course on prompt engineering, an essential skill for everyone.
> how to structure a good prompt
> avoiding common pitfalls
> making a killer prompt library
> 80/20 rules and addressing them
It has 9 step-by-step chapters with exercises.

the legendary @danielhanchen just made a full 3-hour workshop on reinforcement learning and agents. he goes through RL fundamentals, kernels, quantization, and RL+Agents covering both theory and code. great video to get up to speed on these topics.

i still can’t believe adding “think step by step” to the end of a prompt has a prompt name and a paper with 17K citations.

some good points on why ai/ml might not be for you:
> you want to learn everything too fast
> you can’t handle insane competition
> you don’t learn consistently
> you blame the job market instead of locking in
> you need micromanagement

this context engineering cookbook is the most comprehensive repo i've seen covering all the major areas of this hot new topic. for a deeper read, it links related papers on major topics such as memory systems, context persistence, RAG, and much more.

all you need is a group of locked in friends like this and the willingness to sell your soul.

academia’s relationship with ai is so toxic that students have to write stupid code and make intentional typos and mistakes in their reports just to prove they actually did the assignment themselves. essentially you have to be worse than 5 years ago to dodge accusations.

Naive RAG
Graph RAG
Agentic RAG
Fusion RAG
Multimodal RAG
every month a new one pops up and it’s just a dumb vector database goddammit
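to make the "it's just a vector database" point concrete, here is a minimal sketch of the retrieval step in the naive variant. everything in it is an assumption for illustration: `embed` is a toy bag-of-words stand-in for a real embedding model, and `ToyVectorStore` and `build_prompt` are hypothetical names, not any library's API.

```python
import math
from collections import Counter


def embed(text):
    """Toy 'embedding': word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Hypothetical in-memory store: keeps (document, vector) pairs."""

    def __init__(self):
        self.items = []

    def add(self, doc):
        self.items.append((doc, embed(doc)))

    def search(self, query, k=1):
        # Rank every stored document by similarity to the query vector.
        q = embed(query)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_prompt(store, question, k=1):
    """Retrieve the top-k documents and paste them in front of the question."""
    context = "\n".join(store.search(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


store = ToyVectorStore()
store.add("graph rag builds a knowledge graph over the documents")
store.add("bananas are rich in potassium")
store.add("fusion rag merges results from several queries")

print(build_prompt(store, "what is fusion rag"))
```

the other variants differ in what gets indexed and how retrieval is orchestrated; this sketch only covers the plain similarity-search case.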

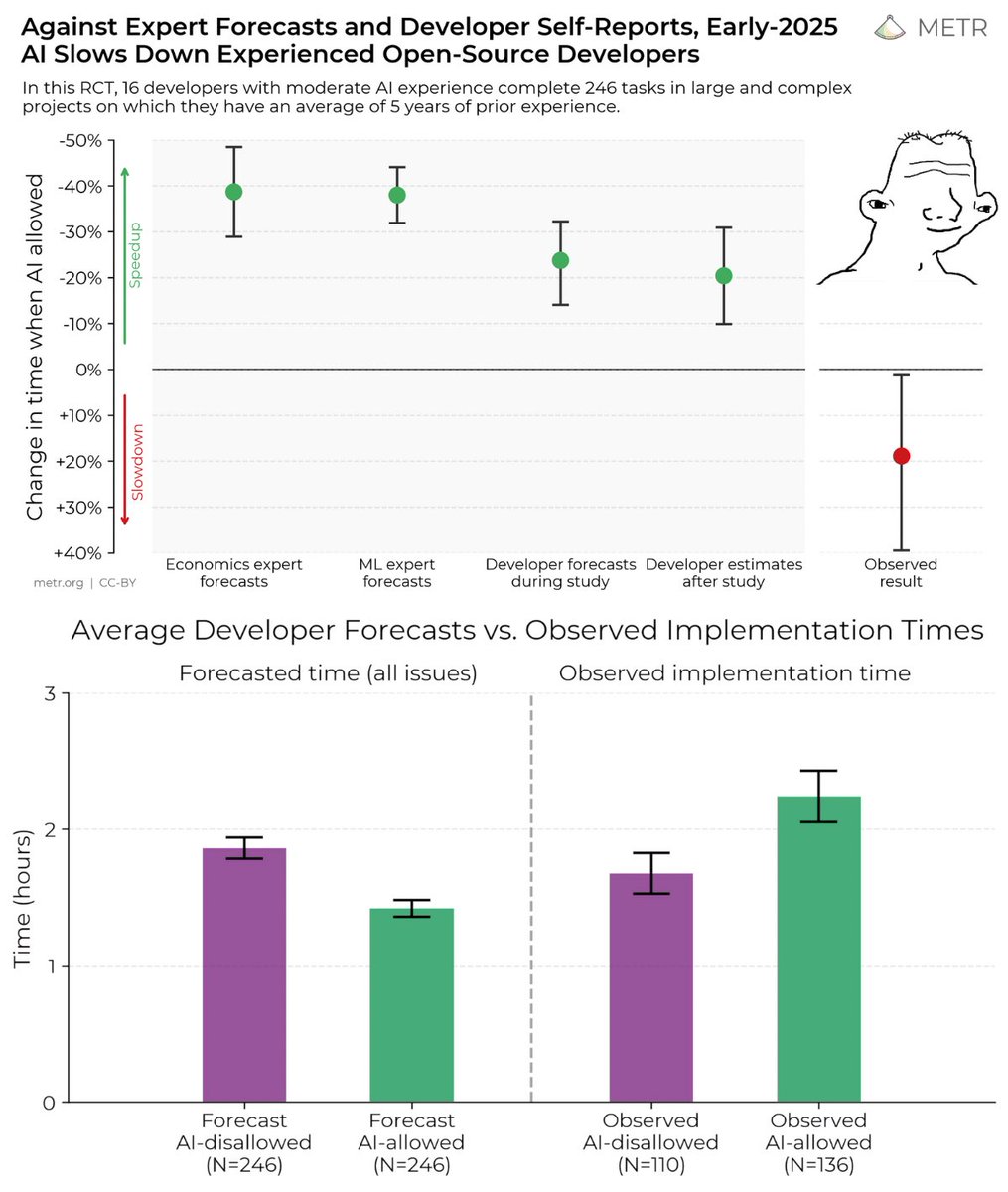

they tested 16 open source developers with and without AI.
> they expected a 24% speedup
> AI slowed them down by 19%
> they still believed they were 20% faster
yeah, pretty interesting…

"Our results reveal that models do not use their context uniformly; instead, their performance grows increasingly unreliable as input length grows" wait... didn't we already know this like 2 years ago? are we being gaslighted?