Haopeng Wang

@HaopengWang725

Ph.D student, HCI researcher, Drummer.

Excited to present our work HeadShift: Head Pointing with Dynamic Control-Display Gain at #CHI2025 in Yokohama! Mon, 4:56–5:08 PM, Room G301 🎤 A big thank you to my amazing co-authors: @ludwigsidenmark, Florian Weidner, Joshua Newn, and @HansGellersen! See you there! 🙌

Presented my PhD research on “Understanding and Leveraging Head Movement as Input in Interactions” at the #IEEEVR 2025 Doctoral Consortium. 🌊

Excited to announce our latest TOCHI publication: "HeadShift: Head Pointing with Dynamic Control-Display Gain" with @ludwigsidenmark @FlorianWeidner @JoshuaNewn @HansGellersen dl.acm.org/doi/10.1145/36…

So happy to introduce Hans Gellersen as a new inductee to the SIGCHI Academy. Congratulations, @HansGellersen!

📢 ETRA community members at @LancasterUni are organizing the GEMINI Workshop on Gaze, Gestures, and Multimodal Interaction. 🗓️ When: June 3rd, 2024 📍 Where: Lancaster University 👉 Register now: gemini-erc.eu/gemini2024work… 🗒️ Register before May 24th (no registration fee) @HansGellersen

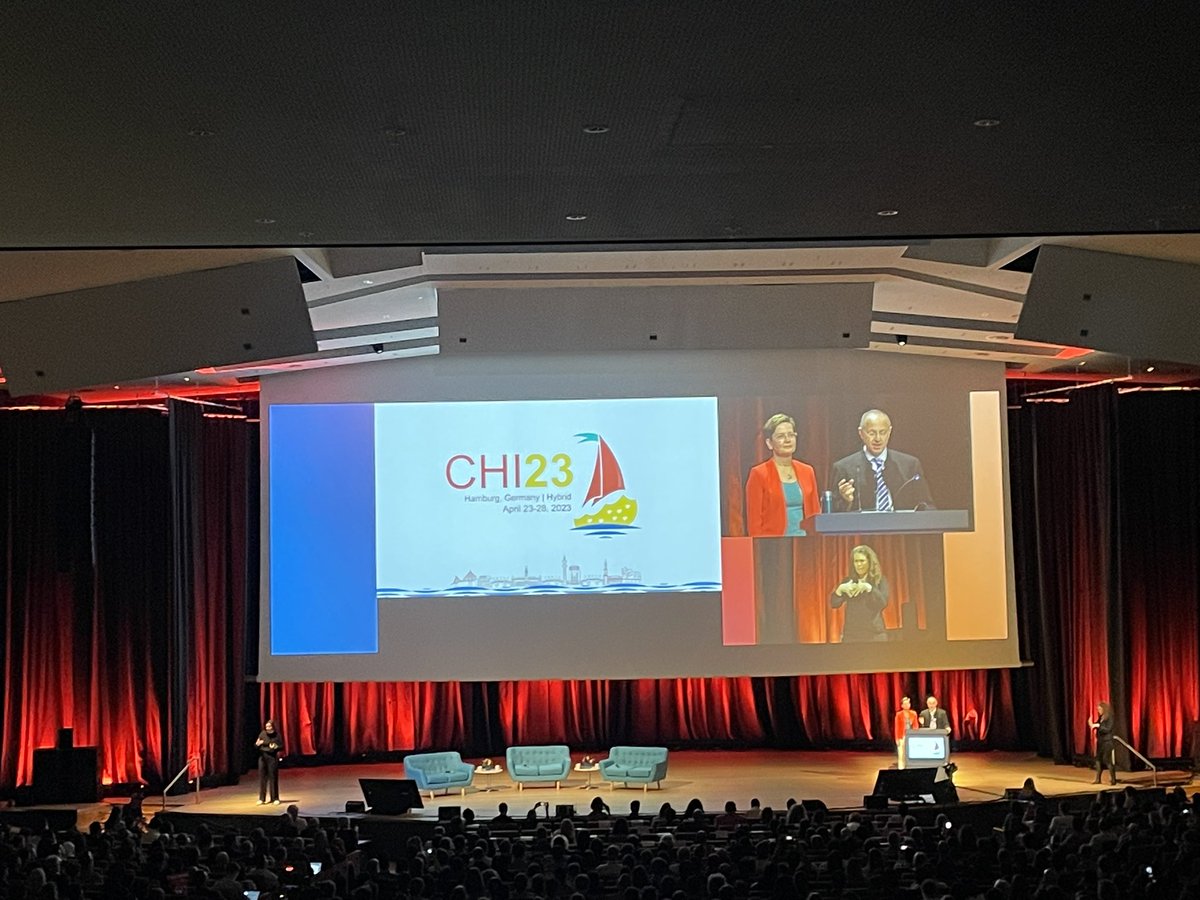

@ludwigsidenmark presenting his work on Vergence Matching at #CHI2023. This work is in collaboration with @ChrisOfClarke, @JoshuaNewn, and @HansGellersen. More information in the paper: dl.acm.org/doi/10.1145/35…

Uta Wagner presenting her work "A Fitts' Law Study of Gaze-Hand Alignment for Selection in 3D User Interfaces" at #CHI2023. This work is in collaboration with @KenPfeuffer and @HansGellersen. More information in the paper: dl.acm.org/doi/10.1145/35…

@JamesBHou presenting his work "Classifying Head Movements to Separate Head-Gaze and Head Gestures as Distinct Modes of Input" at #CHI2023. This work is in collaboration with @ludwigsidenmark, @JoshuaNewn, and @HansGellersen. More information in the paper: dl.acm.org/doi/10.1145/35…