Fabian Schaipp

@FSchaipp

working on optimization for machine learning. currently postdoc @inria_paris. sbatch and apero.

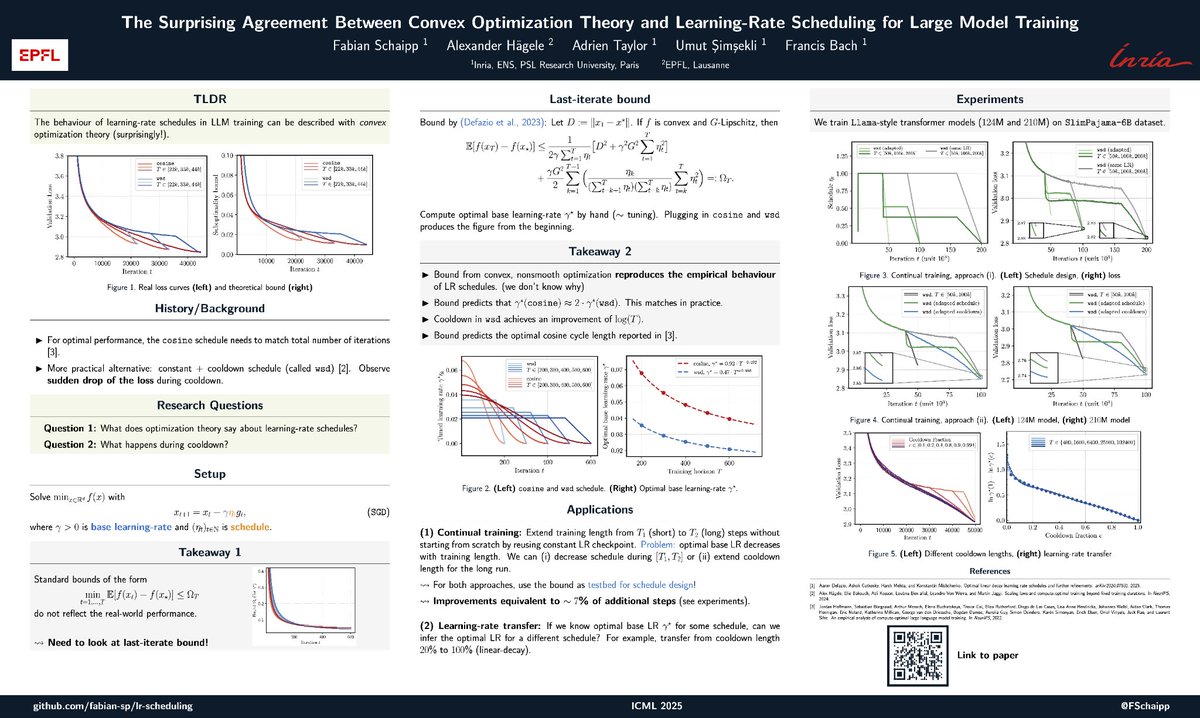

Learning rate schedules seem mysterious? Turns out that their behaviour can be described with a bound from *convex, nonsmooth* optimization. Short thread on our latest paper 🚇 arxiv.org/abs/2501.18965

The sudden loss drop when annealing the learning rate at the end of a WSD (warmup-stable-decay) schedule can be explained without relying on non-convexity or even smoothness. A new paper shows that it can be precisely predicted by theory in the convex, non-smooth setting! 1/2
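For readers who have not met WSD before, here is a minimal sketch of what such a schedule can look like. The function name `wsd_lr`, the parameters `warmup_frac` and `decay_frac`, their default values, and the linear shapes of the warmup and decay phases are all assumptions made for illustration; they are not taken from the paper.

```python
def wsd_lr(step, total_steps, base_lr=1.0, warmup_frac=0.05, decay_frac=0.2):
    """Learning rate at `step` (0-indexed) for a warmup-stable-decay schedule.

    Hypothetical helper: the phase fractions and linear shapes are
    illustrative assumptions, not values from the paper.
    """
    warmup_steps = int(warmup_frac * total_steps)
    decay_steps = int(decay_frac * total_steps)
    decay_start = total_steps - decay_steps

    if step < warmup_steps:
        # Warmup: ramp linearly up to base_lr.
        return base_lr * (step + 1) / warmup_steps
    if step < decay_start:
        # Stable: hold the learning rate constant.
        return base_lr
    # Decay: anneal linearly toward zero over the final steps.
    return base_lr * (total_steps - step) / decay_steps


if __name__ == "__main__":
    total = 100
    for s in (0, 4, 50, 80, 90, 99):
        print(s, wsd_lr(s, total))
```

The decay phase at the end is where the sudden loss drop mentioned above shows up in practice.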

FYI Adam will be on holiday the entire August

Anyone know Adam?

still holds in 2025

#ICML2024 oral but still every speaker needs to connect his own laptop for the slides

Best tutorials are those that do not only promote the speakers' own work. #ICML2025

🚡 Come check out our poster on understanding LR schedules at ICML. Thursday 11am.

Pogacar hasn't had a bad day since TdF 2023, stage 17. Quite astonishing #TDF2025

A paper that contains both the words "sigma-algebra" and "SwiGLU activations" ☑️ Also interesting results on embedding layer LRs.

We uploaded V3 of our draft book "The Elements of Differentiable Programming". Lots of typo fixes, clarity improvements, new figures and a new section on Transformers! arxiv.org/abs/2403.14606

is it allowed to write papers on μP only subject to using the most un-intuitive notation?

what are the best resources for training and inference setup in diffusion models? ideally with (pseudo-)code

Optimization is the natural language of applied mathematics.

now accepted at #ICML 2025! ☄️

Learning rate schedules seem mysterious? Turns out that their behaviour can be described with a bound from *convex, nonsmooth* optimization. Short thread on our latest paper 🚇 arxiv.org/abs/2501.18965

biggest tech improvement in a while: my (android) phone can now open arxiv pdfs in the browser without downloading them 📗