Dmitry Rybin

@DmitryRybin1

PhD at CUHK || ML for Math, Search, Planning || Grand First Prize at IMC || AI + Math

We discovered a faster way to compute the product of a matrix and its transpose! This has profound implications for data analysis, chip design, wireless communication, and LLM training! Paper: arxiv.org/abs/2505.09814 The algorithm is based on the following discovery: we can compute…
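
The tweet is truncated before it describes the new algorithm, so the sketch below does not reproduce it. As a point of reference only, here is the standard symmetry trick that BLAS syrk also relies on: since (XXᵀ)ᵀ = XXᵀ, only the blocks on or above the diagonal need to be multiplied, and the rest can be mirrored. The function name `xxt_symmetric_blocked` and the default block size are illustrative choices, not anything from the paper.

```python
import numpy as np

def xxt_symmetric_blocked(X: np.ndarray, block: int = 64) -> np.ndarray:
    """Compute X @ X.T by multiplying only the blocks on or above the diagonal.

    Block (j, i) of X X^T equals the transpose of block (i, j), so with m block
    rows only m*(m+1)/2 block products are needed instead of m*m.
    This is the classic syrk-style baseline, NOT the algorithm from the paper.
    """
    n = X.shape[0]
    C = np.empty((n, n), dtype=X.dtype)
    for i in range(0, n, block):
        Xi = X[i:i + block]
        for j in range(i, n, block):
            Cij = Xi @ X[j:j + block].T          # one block product
            C[i:i + block, j:j + block] = Cij
            if j != i:
                C[j:j + block, i:i + block] = Cij.T  # mirror instead of recomputing
    return C

rng = np.random.default_rng(0)
X = rng.standard_normal((300, 128))
print(np.allclose(xxt_symmetric_blocked(X, block=64), X @ X.T))  # True
```

Slicing past the end of the array is safe in NumPy, so the last block row and column may be smaller than `block` without special handling.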

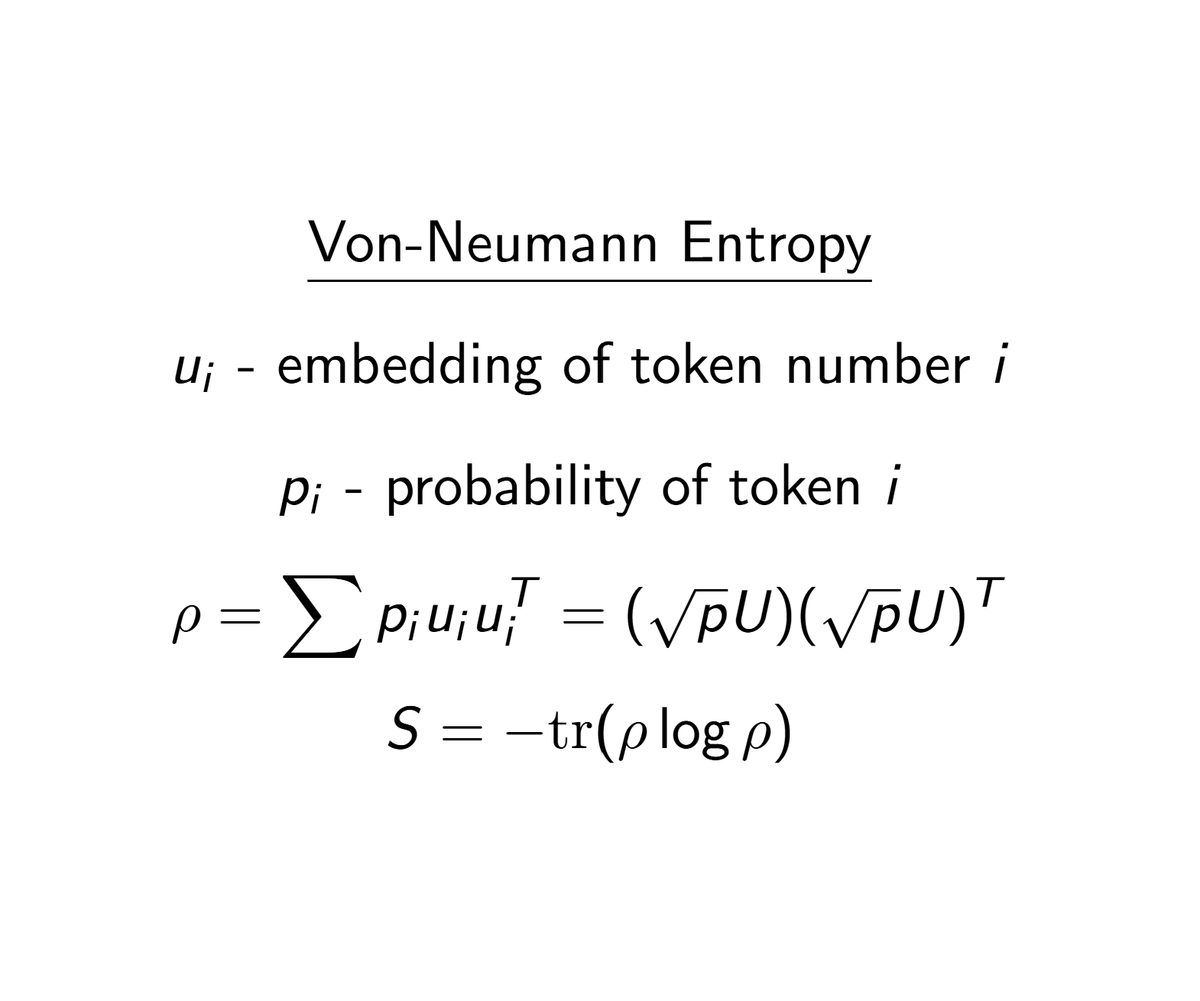

RL+LLM researchers actively use the entropy of the LLM's output distribution to measure training dynamics. This number is misleading. John von Neumann and Lev Landau gave us the correct answer 100 years ago while studying mixed quantum states in Hilbert spaces. The usual entropy treats all tokens as…
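
The tweet is cut off before the argument is made, so the following is only a guess at one natural construction, not the author's method. Assume (hypothetically) that each token i has an embedding vector e_i, normalized to unit length. From the next-token probabilities p_i one can build a density matrix ρ = Σ_i p_i e_i e_iᵀ and compare its von Neumann entropy S(ρ) = −tr(ρ log ρ) with the usual Shannon entropy H(p) = −Σ_i p_i log p_i. With orthonormal embeddings the two agree; when probability mass sits on similar tokens, S(ρ) falls below H(p).

```python
import numpy as np

def shannon_entropy(p: np.ndarray) -> float:
    """H(p) = -sum_i p_i log p_i (natural log), skipping zero-probability tokens."""
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))

def von_neumann_entropy(p: np.ndarray, E: np.ndarray) -> float:
    """S(rho) = -tr(rho log rho) for rho = sum_i p_i e_i e_i^T.

    E holds one row per token; using token embeddings here is an assumption
    for illustration. Rows are L2-normalized so that tr(rho) = sum_i p_i = 1.
    """
    E = E / np.linalg.norm(E, axis=1, keepdims=True)
    rho = (E.T * p) @ E              # sum_i p_i e_i e_i^T, shape (d, d)
    lam = np.linalg.eigvalsh(rho)    # real eigenvalues of the symmetric PSD matrix
    lam = lam[lam > 1e-12]           # drop numerical zeros before taking logs
    return float(-np.sum(lam * np.log(lam)))

p = np.full(4, 0.25)                           # uniform over 4 tokens
print(shannon_entropy(p))                      # log 4
print(von_neumann_entropy(p, np.eye(4)))       # orthonormal tokens: also log 4
print(von_neumann_entropy(p, np.ones((4, 3)))) # identical tokens: ~0
```

For mixtures of unit vectors like this one, S(ρ) ≤ H(p) always holds, so the gap H(p) − S(ρ) is one hypothetical way to measure how much of the apparent entropy comes from spreading mass over tokens whose embeddings are nearly parallel.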

The International Math Competition for undergrads is in 6 days. 60%+ of participants are IMO medalists. It would be nice to see how DeepMind and OpenAI models perform there @alexwei_ @lmthang

Math evals that go beyond the IMO:
- Putnam
- International Math Competition (aka the undergrad IMO)
- Alibaba Global Math Competition
- latest arXiv lemmas and theorems
- latest homework from the Independent University of Moscow and other world-class departments

If I had @GoogleDeepMind tools like AlphaEvolve, Deep Think, AlphaProof, and AlphaTensor at hand, I would be able to immediately resolve many open problems in CS, combinatorics, graph theory, and matrix multiplication (e.g., see our result on accelerating XX^T, and we have much more…

Gemini Deep Think, our theorem-proving agent AlphaProof, and our algorithm-discovery agent AlphaEvolve will be powerful tools for mathematicians in their pursuit to advance human knowledge.

Having a deep think…

Official results are in - Gemini achieved gold-medal level in the International Mathematical Olympiad! 🏆 An advanced version was able to solve 5 out of 6 problems. Incredible progress - huge congrats to @lmthang and the team! deepmind.google/discover/blog/…

Every working mathematician and computer scientist should read this carefully

Today, we at @OpenAI achieved a milestone that many considered years away: gold medal-level performance on the 2025 IMO with a general reasoning LLM—under the same time limits as humans, without tools. As remarkable as that sounds, it’s even more significant than the headline 🧵

Gemini-2.5-pro is really impressive. It consistently gets a full score on the extremely difficult Problem 3 from IMO 2025, released 2 days ago. This problem is usually solved by only ~50 high school students in the world.

We just released the evaluation of LLMs on the 2025 IMO on MathArena! Gemini scores best, but is still unlikely to achieve the bronze medal with its 31% score (13/42). 🧵(1/4)

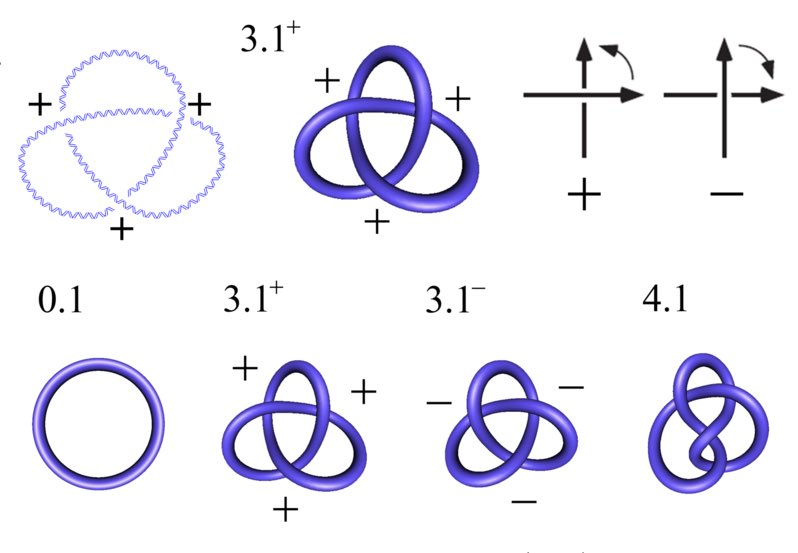

One time I was riding the subway in China, and this 13-year-old kid found out I know math and started quizzing me on topological invariants of knots 😭 What do you mean I need to prove the chirality of the trefoil knot? 🥲
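
For the curious, one standard proof (not given in the tweet) uses the Jones polynomial V_K(t): taking the mirror image K* of a knot replaces t with t⁻¹. For one of the two trefoils (which one depends on orientation conventions) the polynomial is not symmetric under this substitution, so the trefoil cannot be deformed into its mirror image:

```latex
V_{K^*}(t) = V_K\!\left(t^{-1}\right) \qquad (K^* = \text{mirror image of } K)

V_{\mathrm{trefoil}}(t) = t + t^{3} - t^{4}
\;\neq\; t^{-1} + t^{-3} - t^{-4} = V_{\mathrm{trefoil}}\!\left(t^{-1}\right)
```

Since V is a knot invariant and the two sides differ, the trefoil is chiral.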

I observed this too. For integer initializations and certain sizes, Strassen-like matmul algorithms drastically outperformed baselines. That's why I always test on random normal data thonking.ai/p/strangely-ma…
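
To make "Strassen-like" concrete, here is a minimal sketch of one level of Strassen's algorithm (7 half-size products instead of 8), checked on random normal inputs as the tweet recommends. The function name is illustrative, it assumes square matrices of even size, and timing is left out because it is hardware-dependent.

```python
import numpy as np

def strassen_one_level(A: np.ndarray, B: np.ndarray) -> np.ndarray:
    """One level of Strassen's algorithm: 7 half-size matmuls instead of 8.

    Assumes A and B are square with the same even size n; the 7 sub-products
    themselves use ordinary NumPy matmul.
    """
    n = A.shape[0]
    assert A.shape == B.shape == (n, n) and n % 2 == 0
    h = n // 2
    A11, A12, A21, A22 = A[:h, :h], A[:h, h:], A[h:, :h], A[h:, h:]
    B11, B12, B21, B22 = B[:h, :h], B[:h, h:], B[h:, :h], B[h:, h:]
    M1 = (A11 + A22) @ (B11 + B22)
    M2 = (A21 + A22) @ B11
    M3 = A11 @ (B12 - B22)
    M4 = A22 @ (B21 - B11)
    M5 = (A11 + A12) @ B22
    M6 = (A21 - A11) @ (B11 + B12)
    M7 = (A12 - A22) @ (B21 + B22)
    # Recombine the 7 products into the four quadrants of C = A @ B.
    return np.block([
        [M1 + M4 - M5 + M7, M3 + M5],
        [M2 + M4, M1 - M2 + M3 + M6],
    ])

# Validate on random normal data rather than small integers.
rng = np.random.default_rng(0)
A = rng.standard_normal((512, 512)).astype(np.float32)
B = rng.standard_normal((512, 512)).astype(np.float32)
err = np.max(np.abs(strassen_one_level(A, B) - A @ B))
print(f"max abs error vs A @ B: {err:.2e}")
```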

Such data should be very useful. It's a bit confusing to me why Gemini 2.5 is almost at human-expert level. Perhaps stratifying by problem difficulty would reveal more. Tbh, I've never seen a case of incorrect human judging at the Russian National Math Olympiads.
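
A minimal sketch of the stratification suggested above: group judged proofs by a difficulty label and compute how often the LLM judge agrees with the human judge in each bucket. The function name and the `(difficulty, human_verdict, llm_verdict)` record layout are hypothetical, not the Open Proof Corpus schema. A single overall agreement rate can look near-expert while hiding lower agreement on the hardest bucket.

```python
from collections import defaultdict

def agreement_by_difficulty(records):
    """Return {difficulty: fraction of proofs where LLM and human verdicts match}.

    records: iterable of (difficulty, human_verdict, llm_verdict) tuples.
    This layout is an assumption for illustration only.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for difficulty, human, llm in records:
        totals[difficulty] += 1
        hits[difficulty] += int(human == llm)
    # Sorting assumes difficulty labels of one comparable type (all str or all int).
    return {d: hits[d] / totals[d] for d in sorted(totals)}
```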

Thrilled to share a major step forward for AI for mathematical proof generation! We are releasing the Open Proof Corpus: the largest ever public collection of human-annotated LLM-generated math proofs, and a large-scale study over this dataset!

Would like to share some recent numerical results, which might draw interest from the theory community. We visualized >100 Hessian matrices of DNNs and tracked how they evolve along training. Here are the key messages: (1) the Hessian is highly structured at initialization,…

Very insightful talk by Demis Hassabis at IAS! I didn't know DeepMind was working on room-temperature superconductors! youtu.be/TgS0nFeYul8

Big kudos to Vladimir @shitov_happens for implementing our new X*X^T algorithm in CUDA with no extra memory! I tried it, and it is already faster than cuBLAS syrk/gemm in some setups: 7% faster for 16k x 16k matrices in FP32 on an RTX 4090. It is comparable in others: 1-2% slower than…
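
For scale, a back-of-the-envelope sketch of the classical operation counts for the two cuBLAS baselines, assuming "16k" means n = k = 16384 (an assumption) and the usual convention of 2 flops per multiply-add. These are textbook counts for gemm and syrk only, not counts for the new algorithm; they show that syrk, which computes one triangle of XXᵀ, is already the roughly half-cost baseline to beat.

```python
def gemm_flops(n: int, k: int) -> int:
    """C = X @ X.T via general matmul, X of shape (n, k): n*n dot products of ~2k flops."""
    return 2 * n * n * k

def syrk_flops(n: int, k: int) -> int:
    """syrk computes only the n*(n+1)/2 entries of one triangle: 2k * n(n+1)/2 flops."""
    return n * (n + 1) * k

n = k = 16384  # assumed meaning of "16k"
print(f"gemm: {gemm_flops(n, k) / 1e12:.2f} TFLOP")  # gemm: 8.80 TFLOP
print(f"syrk: {syrk_flops(n, k) / 1e12:.2f} TFLOP")  # syrk: 4.40 TFLOP
```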

Google DeepMind has all the tools, like AlphaProof, to start a new era of scientific LLM benchmarking: testing LLMs on open math problems. And they are getting ready: github.com/google-deepmin…

Thank you, LinkedIn influencer, but it's not DeepMind, it's me