CoreWeave

@CoreWeave

The AI Hyperscaler™

Episode 2 of our AI Cloud Horizons webcast series is dropping next week! Check out a sneak peek from the conversation between @CoreWeave's Jacob Feldman and John Mancuso below. Sign up here for early access to our episodes: hubs.la/Q03yhGzq0 #AICloudHorizon #CoreWeave #SLA

CoreWeave has built every layer of our platform with a singular goal in mind: helping our customers unlock maximum value from their infrastructure. Learn from @GoldbergChen and @l2k how The CoreWeave Cloud Advantage can accelerate your AI innovations ➡️ eventmobi.com/website/corewe…

🚨 New @Alibaba_Qwen model drop: Qwen3-Coder & Qwen3-2507 are now both live in W&B Inference! (unbeatable prices) @JustinLin610 and team really cooked. 🔥 Here's the scoop on these new open SOTA models and how you can get $20 of W&B credits to try them out yourself. 👇

Kimi K2 is now live! Try it out for yourself on W&B Inference, powered by CoreWeave ⚡️

NEW: Kimi K2 is now live on W&B Inference by @CoreWeave! It's the first truly open challenger, ready for production with no waitlists, no restrictions, and SOTA performance. Here's why we think it's larger than the DeepSeek moment and how to claim $50 in inference credits. 🧵

Our Chief of Staff Paddy Woods recently joined leaders at The Global Game Summit, hosted by @GK_ventures & Harris Blitzer Sports & Entertainment, to explore how AI is transforming soccer. Special thanks to @GovMurphy & @NJEDA for championing these discussions and NJ as an AI hub.

As a major milestone in our growing infrastructure network, we’re announcing our intent to commit more than $6B to build a 100MW state-of-the-art AI data center in Lancaster, PA, with the potential to expand up to 300MW. The facility will create jobs, drive regional growth, and…

On @BloombergTV, our CEO Mike Intrator told @CarolineHydeTV that we're gaining control of "how we build, where we build, when we build" with our recent acquisition of @Core_Scientific. Watch the full interview: hubs.la/Q03w-0yj0

CoreWeave is the first to launch @NVIDIA #RTXPRO 6000 Blackwell Server Edition cloud instances! With this exciting new technology, you can experience up to 5.6x faster LLM inference and 3.5x faster text-to-video than the L40S. Get all the details in our new blog post:…

Our CEO Mike Intrator joined @jimcramer on @MadMoneyOnCNBC to discuss our acquisition of @Core_Scientific and how it strengthens our position as a leader in AI infrastructure, extending our stack from hardware to software. Watch here: hubs.la/Q03wgZHz0

Today is an exciting day for CoreWeave! We just announced an agreement to acquire @Core_Scientific, solidifying our position as the leading AI Cloud Platform—purpose-built to deliver unmatched performance and expertise for the AI era. 🚀 coreweave.com/news/coreweave… #PoweringAI

We’re the first cloud provider to bring up the @NVIDIA GB300 NVL72, delivering up to 50x inference throughput and 10x user responsiveness for next-gen AI. Built in collaboration with @Dell and @Switch. The future of AI is now. 🔗coreweave.com/blog/coreweave…

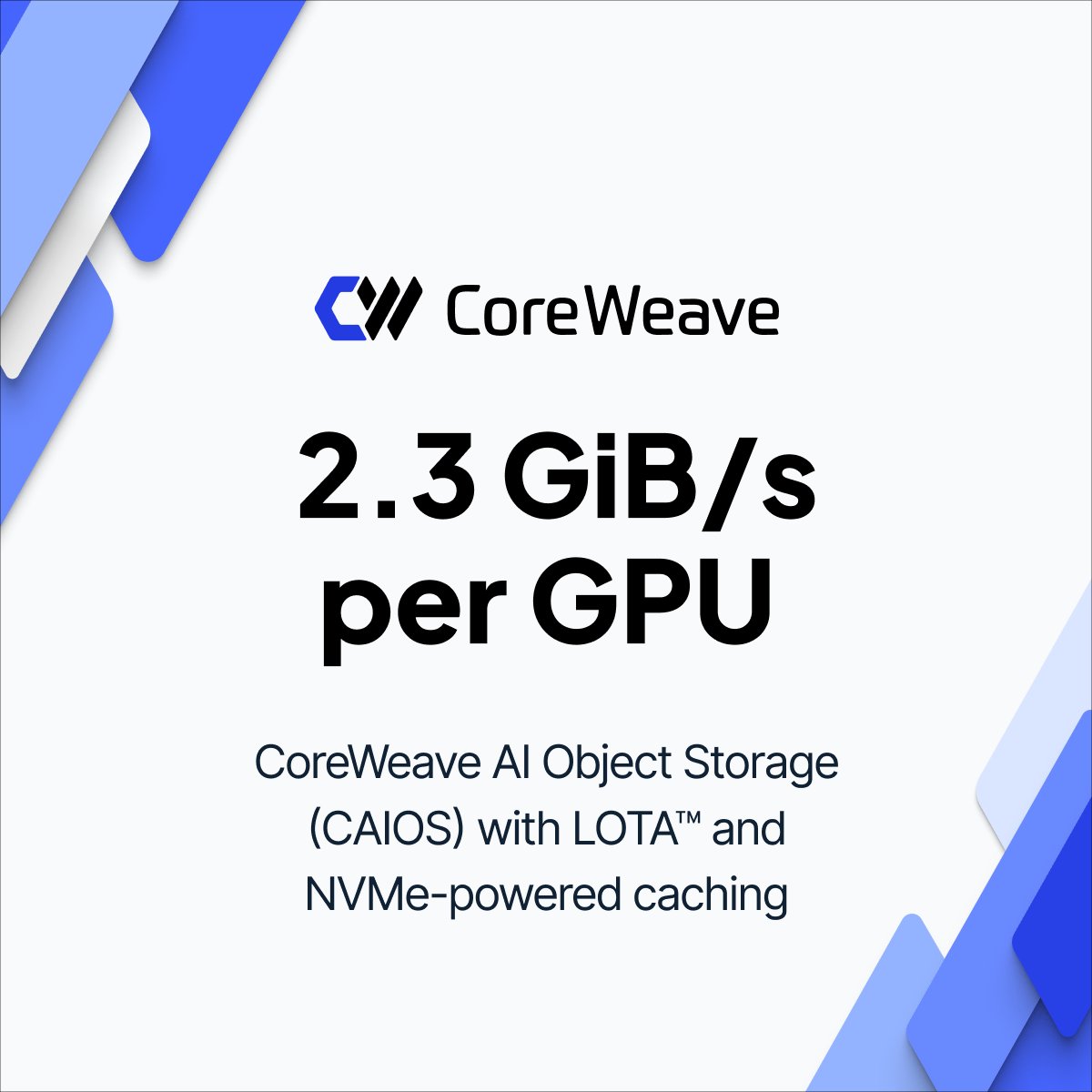

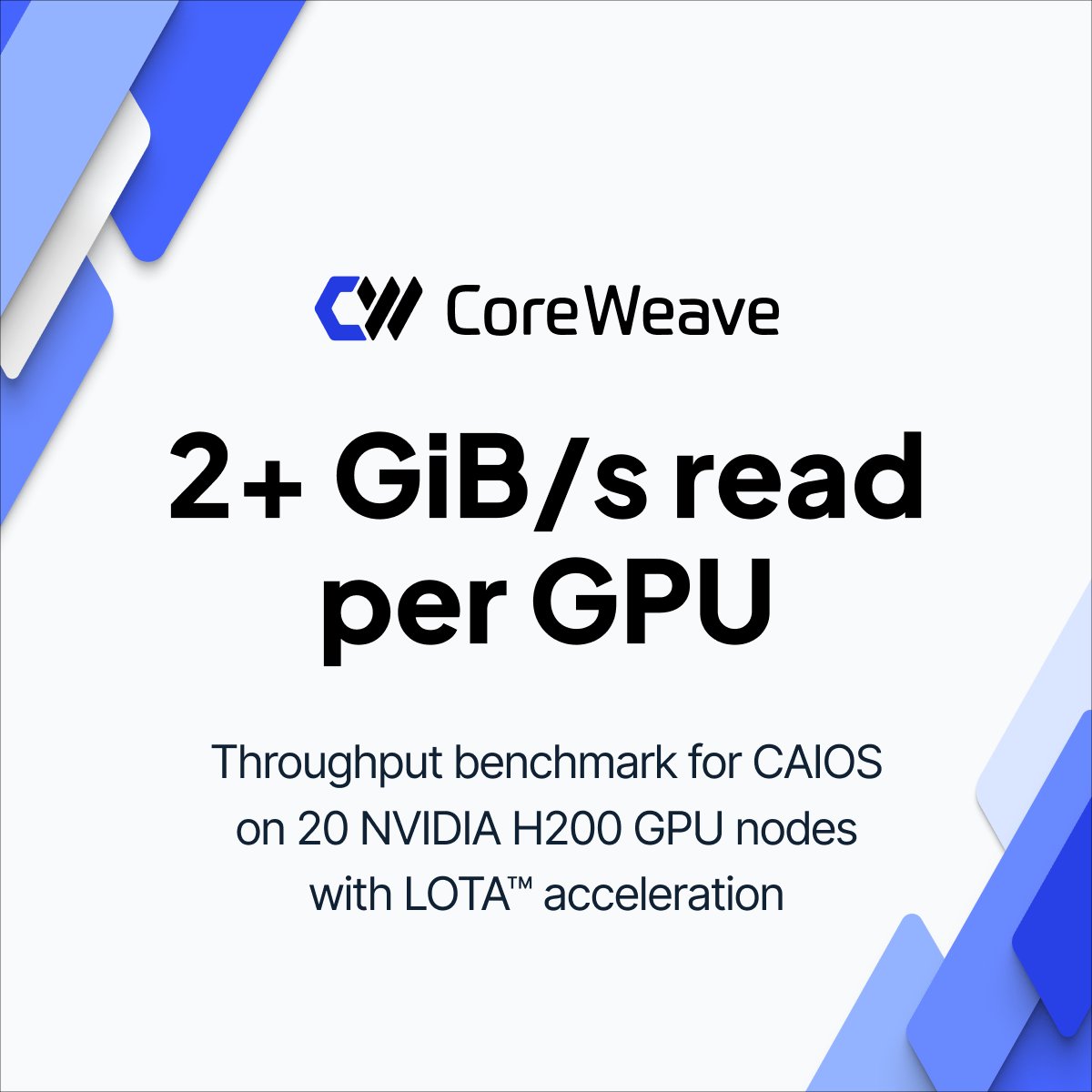

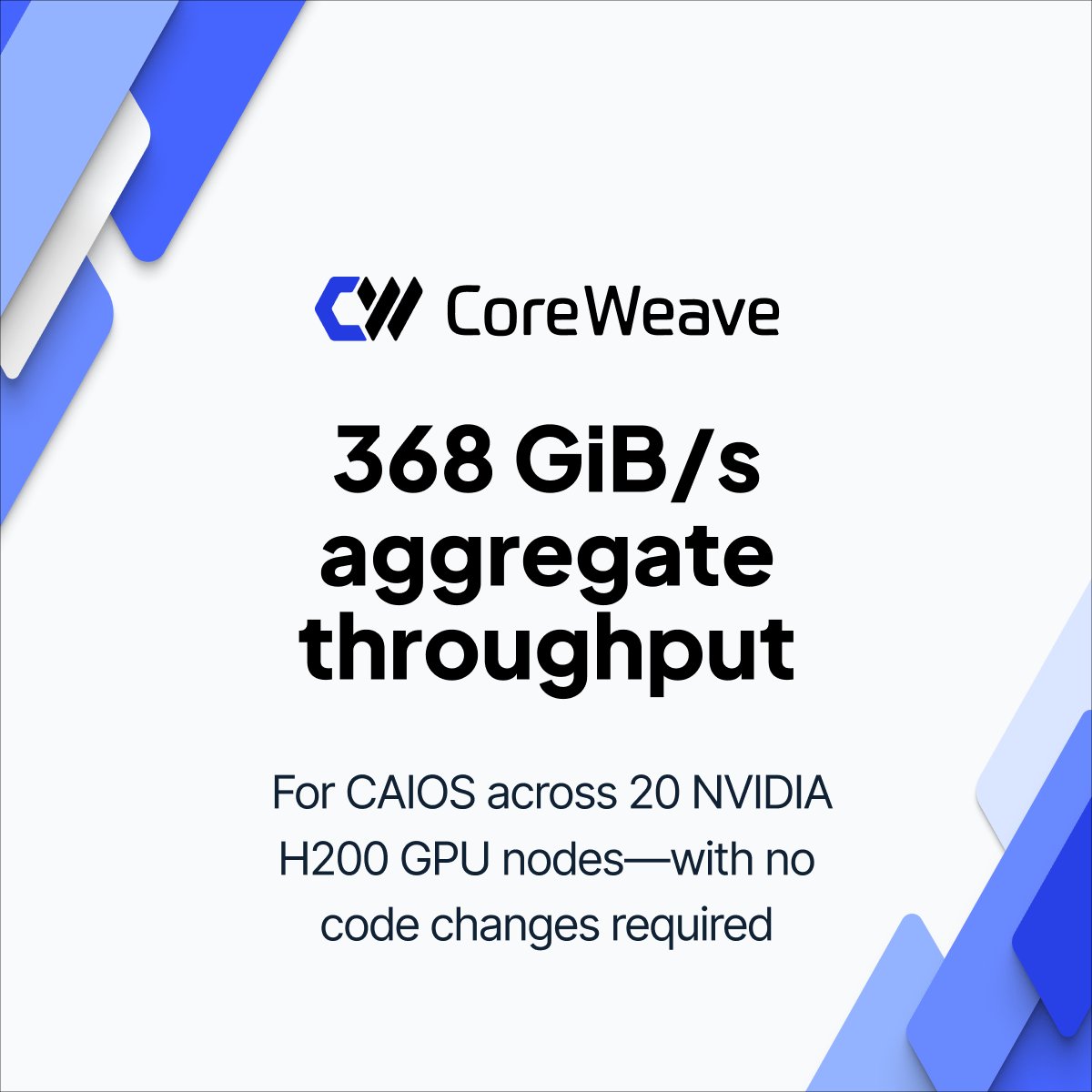

Here’s why @CoreWeave AI Object Storage changes the game: • 2 GB/s per GPU throughput • Exabyte-scale capacity • Encryption & fine-grained access control Your data. Your bucket. Maximum speed. Set it up in minutes: docs.wandb.ai/guides/hosting…

That's a wrap on our virtual summit with @NVIDIA! If you missed the event yesterday, click the link below to access the sessions with executives from some of the world's leading AI companies ⚡ Check it out here: hubs.la/Q03v4Ysp0 #CoreWeave #AI #virtualsummit

🚀 Accelerate your #AI innovation with the AI Innovation Virtual Summit on June 26, hosted with @CoreWeave. Hear from industry leaders, technical experts, and customers like @IBM and @OpenAI sharing real-world strategies and success stories. ➡️ nvda.ws/44hvKXu

It’s the final countdown to @CoreWeave x @nvidia Accelerating AI Innovation Virtual Summit! ⏰ Claim your front-row seat to bold ideas, exclusive insights, real-world success stories, and a look into the future of AI infrastructure. Register here 👉 hubs.la/Q03tFphd0

Training today’s largest AI models requires more than just compute; it demands storage that can keep up. CoreWeave AI Object Storage delivers just that: 2.3 GiB/s per GPU, thanks to our Local Object Transport Accelerator (LOTA™). Read more in our blog: hubs.la/Q03tDZhl0

This Thursday, hear how industry leaders like @OpenAI, @MistralAI and @IBM are leveraging CoreWeave to push the boundaries of AI innovation. Grab your virtual ticket for @CoreWeave and @nvidia's virtual summit here: hubs.la/Q03tqWC20