Cleanlab

@CleanlabAI

Cleanlab makes AI agents reliable. Detect issues, fix root causes, and apply guardrails for safe, accurate performance.

Still think LLMs can’t reason? That’s getting harder to believe with today’s new o3-mini model. But even with o3-mini, these models can definitely still hallucinate… Automatically score the trustworthiness of responses from any model with TLM, now including o3-mini!

Let's build a "Chat with your Code" RAG app using Qwen3-Coder:

AI agents don’t just fail from hallucinations. They fail when tool calls go wrong: wrong tool, bad input, skipped step. We dropped a new tutorial to score tool calls for trust so you can catch failures early, before they hit users. 👉 help.cleanlab.ai/tlm/tutorials/…

🤖 🛡️ Cleanlab Trust Scoring: Cleanlab's powerful trust scoring system prevents AI hallucinations in customer support, integrating seamlessly with LangGraph to detect and block problematic responses before they reach users. Explore the technical implementation here:…

🤖 Building with @OpenAI’s Agents SDK? This new tutorial shows how to catch low-trust outputs before they reach customers:
• Auto-handle incorrect AI responses
• Prevent failures in multi-agent handoffs
• Improve reliability without retraining
👉 help.cleanlab.ai/tlm/use-cases/…

@MITSTEX startup spotlight: @CleanlabAI
MIT startup @Cleanlab partners with @nvidia to tackle the biggest problem in Enterprise AI: outputs you can trust. Full story: developer.nvidia.com/blog/prevent-l…

How to build support agents that are safe, controllable, actually work, and keep you out of the news. Use @CleanlabAI directly integrated with @LangChainAI. Cleanlab is the most integrated and most accurate real-time safety/control layer for Agents/RAG/AI.

🛑 Prevent Hallucinated Responses: Our integration with @CleanlabAI allows developers to catch agent failures in real time. To make this more concrete, they put together a blog and a tutorial showing how to do this for a Customer Support agent. Blog: cleanlab.ai/blog/prevent-h…

We’re on a quest to make customer support chatbots more trustworthy. 🤖 Our new case study with @LangChainAI shows how to catch hallucinations and bad tool calls in real time using Cleanlab trust scores. LangGraph fallbacks make fixing them easy 👇 cleanlab.ai/blog/prevent-h…

The Singapore Government just dropped the Responsible AI Playbook: not just talk, but actual technical guidance for deploying AI systems safely. Their key recommendations:
- LLMs are like "Swiss Cheese": full of unpredictable capability holes.
- Guardrails for reliable LLM apps…

We asked the Databricks AI + Data Summit chatbot where the Cleanlab booth was. It replied: “I couldn’t find any information…” and spit out some code. 🤖💥 It's a good thing we’re here! We exist to make AI answers more trustworthy, even at AI conferences. 😎

Introducing the fastest path to AI Agents that don't produce incorrect responses:
- Power them with your data using @llama_index
- Make them trustworthy using Cleanlab

New integration: @CleanlabAI + LlamaIndex
LlamaIndex lets you build AI knowledge assistants and production agents that generate insights from enterprise data. Cleanlab makes their responses trustworthy. Add Cleanlab to:
• Score trust for every LLM response
• Catch…

New integration: Cleanlab + @weaviate_io! Build and scale Agents & RAG with Weaviate. Then add Cleanlab to:
- Score trust for every LLM response
- Flag hallucinations in real time
- Deploy safely with any LLM
📷weaviate.io/developers/int…

Curious about how to systematically evaluate and improve the trustworthiness of your LLM applications? 🤔 Check out how @CleanlabAI's Trustworthy Language Models (TLM) integrates with #MLflow! TLM analyzes both prompts and responses to flag potentially untrustworthy outputs, no…

🔍 Unlock generative AI success with quality data! Join #AWS & @CleanlabAI for an exclusive workshop at SFO Gen AI Loft on May 9, 2025. Learn to build & scale production-ready AI solutions from experts. For developers & decision-makers. Register now! 👉 go.aws/3SeOxwU

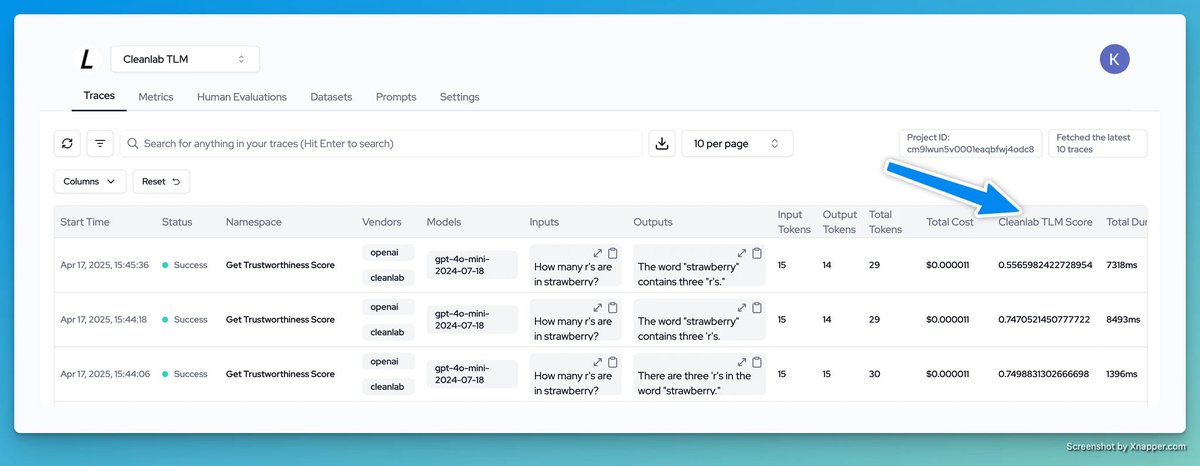

New: @langtrace_ai now includes native support for Cleanlab! Log trust scores, explanations, and metadata for every LLM response—automatically. Instantly surface risky or low-quality outputs. 📝 Blog: langtrace.ai/blog/langtrace… 💻 Docs: docs.langtrace.ai/supported-inte…