Bud Ecosystem

@BudEcosystem

GenAI Made Practical, Profitable and Scalable!

As an opinionated platform, Bud Runtime abstracts away the complexities of GenAI deployment and recommends the best choices for you—such as optimal deployment configurations, appropriate hardware sizing, the most suitable models and clusters for your use case, and more. In…

This week, we released a new open-source project: 𝗕𝘂𝗱 𝗦𝘆𝗺𝗯𝗼𝗹𝗶𝗰 𝗔𝗜 — a framework designed to bridge traditional pattern matching (like regex and Cucumber expressions) with semantic understanding driven by embeddings. It delivers a unified expression framework that…

Some of our researchers are exploring a crazy new idea — can a vector database predict the next token like an autoregressive LLM? It's early days, but we're seriously curious and a little obsessed. We'll keep you posted as the exploration unfolds. Got a geeky friend who lives…

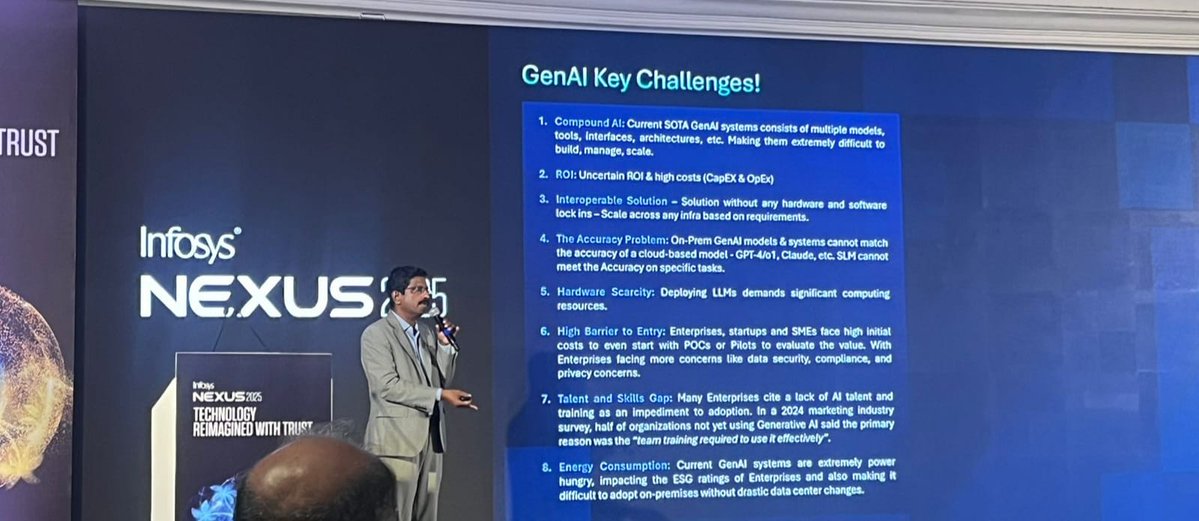

We had a great time at Infosys Nexus 2025! It was an incredible opportunity to share our latest innovations in making generative AI more accessible for everyone. Thanks to everyone who stopped by, asked questions, and sparked meaningful conversations. We're excited about what…

What’s new in LLM inference optimization? New techniques are improving latency, cutting costs, and boosting scalability every week. Check out the latest advances that can help you optimize your GenAI deployments & reduce TCO: bud.studio/content/whats-… AI devs — did we miss any…

🙌 Prepped and pumped to share what we’ve been building in GenAI — can’t wait for the chats and live demos! #BudEcosystem

Training and deploying LLMs or diffusion models demand massive compute, making the total cost of ownership (TCO) a serious concern for teams building production-grade systems. To make GenAI cost-effective and scalable, you need to squeeze out every bit of performance from your…

India's best bet to achieve AI sovereignty is to adopt a 𝗖𝗣𝗨-𝗳𝗶𝗿𝘀𝘁 𝗮𝗻𝗱 𝘀𝗼𝗳𝘁𝘄𝗮𝗿𝗲 𝗶𝗻𝗻𝗼𝘃𝗮𝘁𝗶𝗼𝗻-𝗳𝗶𝗿𝘀𝘁 𝘀𝘁𝗿𝗮𝘁𝗲𝗴𝘆, focusing on frugal, resilient, and scalable approaches rather than trying to match the compute-intensive infrastructure of global…

We’ve made a major upgrade to our #LLM Evaluation Framework — making it even more powerful, transparent, and scalable for enterprise #AI workflows. ✅ Instantly evaluate LLMs across 100+ datasets ✅ Compare models using real, reproducible metrics ✅ Customize benchmarks to match…

Here is a demo of a real-time Audio-to-Audio system that uses tool calling, RAG, LLM, STT, and TTS, all running on a single 5th Generation Intel Xeon. The system supports high concurrency and is production-ready, delivering a seamless real-time Audio-to-Audio experience at scale…

Check out this discussion on one of our recent works with Intel - Accelerating Embedding Model Inference and Deployment Using Bud Latent. Bud Latent is purpose-built to optimize inference performance for embedding models at enterprise scale. It delivers up to 90% faster…

Check out this discussion on one of our research papers —Reward Based Token Modelling with Selective Cloud Assistance. Our research outlines an approach that combines SLMs and LLMs to enable cost-effective, high-performance inference. By introducing a reward-based mechanism that…

It was our privilege to collaborate with Intel in presenting to representatives from government agencies and enterprises, sharing practical strategies for adopting AI that are cost-effective, high-performing, and scalable. @IntelIndia #IntelAI #BudEcosystem

As GenAI deployments scale, models often span multiple clusters — across cloud, on-prem, or even hybrid environments. Managing these distributed clusters, however, quickly became a headache for us, turning into a complex and time-consuming task. And we’re sure this is a problem…

Check out this discussion on one of our research papers — Inference Acceleration for Large Language Models on CPUs. Our research outlines an approach to accelerate inference for LLMs on CPUs. Experiments conducted on #Intel #Xeon demonstrate a significant boost in throughput,…

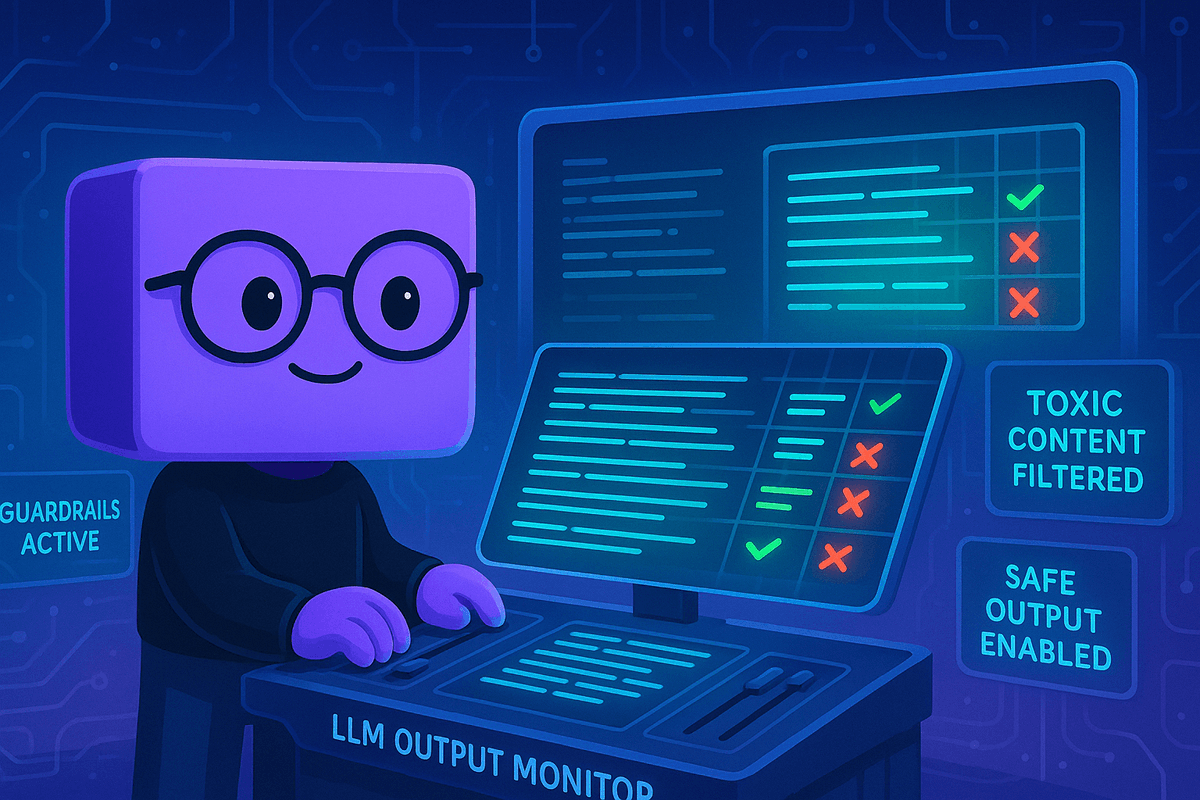

Even the best-aligned models can make mistakes, and that’s why we use guardrails. But what if the guardrails you use are themselves flawed? A faulty guardrail is a risk you can’t afford and that’s why it’s critical to test and validate your guardrails rigorously. In this…

Deploying LLM guardrails? Balance safety and performance! Our latest article covers: ⚙️ Low-latency guardrail techniques 🧠 Prompt injection defenses 💾 Caching, tiered checks, & optimizations 📜 Compliance (GDPR, HIPAA, etc.) Read more 👉 bud.studio/content/a-surv… #LLM…