Brando Miranda @ ICML 2025

@BrandoHablando

CS Ph.D. @Stanford, researching data quality, foundation models, and ML for Theorem Proving. Prev: @MIT, @MIT_CBMM, @IllinoisCS, @IBM. Opinions are mine. 🇲🇽

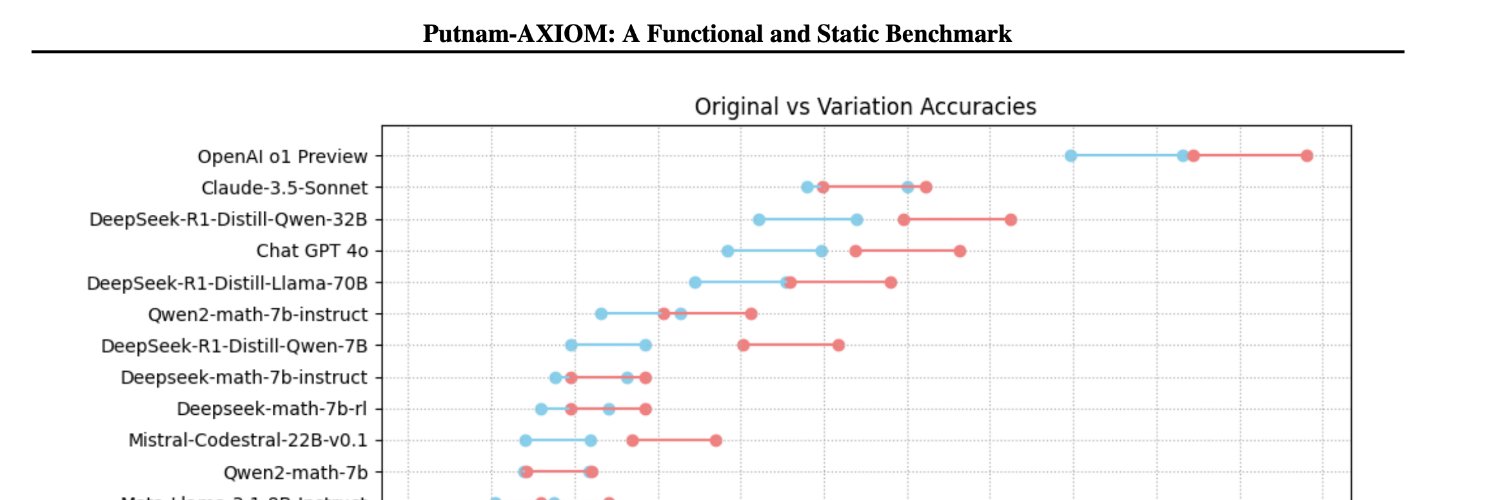

🚨 Can your LLM really do math—or is it cramming the test set? 📢 Meet Putnam-AXIOM, an advanced mathematics contamination-resilient benchmark that finally hurts FMs. 1. openreview.net/forum?id=kqj2C… 2. icml.cc/virtual/2025/p… #ICML2025 East Exhibition Hall A-B, #E-2502 🧵1/14

🚀 Excited to share that the Workshop on Mathematical Reasoning and AI (MATH‑AI) will be at NeurIPS 2025! 📅 Dec 6 or 7 (TBD), 2025 🌴 San Diego, California

Data work is often derided like this and is consistently dismissed by large swaths of the community. This is why data research is the single most underinvested area of ML research relative to its impact. Incidentally, this is also why I believe we need an organization…

Academia must be the only industry where extremely high-skilled PhD students spend much of their time doing low-value work (like data cleaning). A first-year management consultant outsources this immediately. Imagine the productivity gains if PhDs could focus on thinking.

Completely misses the point. Nobody is suggesting that solving IMO problems is useful for math research. The point is that AI has become really good at complex reasoning, and is not just memorizing its training data. It can handle completely new IMO questions designed by a…

Quote of the day: I certainly don't agree that machines which can solve IMO problems will be useful for mathematicians doing research, in the same way that when I arrived in Cambridge UK as an undergraduate clutching my IMO gold medal I was in no position to help any of the…

Ok, now that ICML is officially over... I guess it's time to delete my Twitter app again and lock in on deep life/work? :)💪🧠

Come to EXAIT today for our best paper talk at 9:05 and poster session 11:45-2:15! The workshop also has a great set of talks by Dylan Foster, Wenhao Yu, Masatoshi Uehara, Natasha Jaques, Jeff Clune, Sergey Levine, Andrea Krause, and Alison Gopnik! exait-workshop.github.io/schedule/

Provably Learning from Language Feedback TLDR: RL theory can help us do better inference-time exploration with feedback. Work done with @wanqiao_xu, @ruijie_zheng12, @chinganc_rl, @adityamodi94, @adith387 📰 arxiv.org/pdf/2506.10341 📍EXAIT Best Paper/Oral Sat 8:45-9:30 am

Beyond Scale: the Diversity Coefficient as a Data Quality Metric Demonstrates LLMs are Pre-trained on Formally Diverse Data paper page: huggingface.co/papers/2306.13… Current trends to pre-train capable Large Language Models (LLMs) mostly focus on scaling of model and dataset size.…

Come to Convention Center West room 208-209, 2nd floor, to learn about optimal data selection using compression like gzip! tldr; you can learn much faster if you use gzip compression distances to select data given a task! DM me if you are interested or want to use the code!

🚨 What’s the best way to select data for fine-tuning LLMs effectively? 📢Introducing ZIP-FIT—a compression-based data selection framework that outperforms leading baselines, achieving up to 85% faster convergence in cross-entropy loss, and selects data up to 65% faster. 🧵1/8
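The gzip-distance idea behind these two tweets can be sketched in a few lines of Python. This is a minimal illustration, not the ZIP-FIT code: it assumes the standard normalized compression distance (NCD) computed with Python's `gzip` module and a simple "mean distance to the target examples" ranking. The function names (`compressed_len`, `ncd`, `select_by_compression`) and the toy data are hypothetical.

```python
import gzip
from typing import List, Tuple


def compressed_len(text: str) -> int:
    """Length in bytes of the gzip-compressed UTF-8 encoding of `text`."""
    return len(gzip.compress(text.encode("utf-8")))


def ncd(x: str, y: str) -> float:
    """Normalized compression distance: smaller means x and y share more structure."""
    cx, cy = compressed_len(x), compressed_len(y)
    cxy = compressed_len(x + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


def select_by_compression(
    candidates: List[str], target: List[str], k: int
) -> List[Tuple[float, str]]:
    """Score each candidate by its mean NCD to the target-task examples
    and keep the k candidates closest to the target (lowest distance)."""
    scored = []
    for cand in candidates:
        mean_dist = sum(ncd(cand, t) for t in target) / len(target)
        scored.append((mean_dist, cand))
    scored.sort(key=lambda pair: pair[0])
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical toy data: a Lean-style target task and two candidate texts.
    target = ["theorem foo (n : Nat) : n + 0 = n := by simp"]
    candidates = [
        "Preheat the oven to 180 degrees and whisk the eggs with sugar.",
        "theorem bar (n : Nat) : 0 + n = n := by simp",
    ]
    for dist, text in select_by_compression(candidates, target, k=2):
        print(f"{dist:.3f}  {text}")
```

The design choice here is that gzip needs no model or embeddings: if a candidate shares substrings with the target, concatenating them compresses better, so its distance drops. On very short strings the fixed gzip header adds noise, so real use would likely score longer documents or batch several target examples together.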

If you missed @wanqiao_xu’s presentation, here are some of our slides! (The workshop will post the full slides on its website later.) Paper: arxiv.org/abs/2506.10341


It's happening today! 📍Location: West Ballroom C, Vancouver Convention Center ⌚️Time: 8:30 am - 6:00 pm 🎥 Livestream: icml.cc/virtual/2025/w… #ICML2025 #icml25 #icml #aiformath #ai4math #workshop