Nicola Branchini

@Branchini_Nic

🇮🇹 4th yr @ELLISforEurope Stats PhD @EdinUniMaths 🏴.🤔💭 about importance sampling, reliable uncertainty quantification. Visiting @avehtari 🇫🇮

🚨 New paper: “Towards Adaptive Self-Normalized IS”. TL;DR: to estimate µ = E_p[f(θ)] when p(θ) has an intractable partition function, instead of running MCMC on p(θ) or learning a parametric q(θ), we try MCMC directly on p(θ)|f(θ)-µ|, the variance-minimizing proposal. arxiv.org/abs/2505.00372
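For readers new to the setup, here is a minimal sketch of plain self-normalized importance sampling (SNIS) with a fixed Gaussian proposal. It is not the adaptive MCMC scheme from the paper. The toy target, the proposal scale `sigma_q`, and the helper name `snis_estimate` are all assumptions made for illustration. The point is only that the estimator needs p(θ) up to a constant, which is why an intractable partition function is not an obstacle.

```python
import numpy as np


def snis_estimate(f, log_p_tilde, sample_q, log_q, n, rng):
    """Self-normalized IS estimate of E_p[f(theta)].

    log_p_tilde and log_q may each omit additive constants,
    because the weights are normalized to sum to one.
    """
    theta = sample_q(n, rng)
    log_w = log_p_tilde(theta) - log_q(theta)
    log_w -= log_w.max()          # stabilize before exponentiating
    w = np.exp(log_w)
    w /= w.sum()                  # self-normalization step
    return np.sum(w * f(theta))


# Hypothetical toy problem: unnormalized N(1, 1) target, f(theta) = theta,
# so the true value is mu = 1.
rng = np.random.default_rng(0)
sigma_q = 2.0                     # assumed proposal scale, wider than the target


def log_p_tilde(t):
    return -0.5 * (t - 1.0) ** 2  # target log-density up to a constant


def sample_q(n, rng):
    return rng.normal(0.0, sigma_q, size=n)


def log_q(t):
    return -0.5 * (t / sigma_q) ** 2  # proposal log-density up to a constant


mu_hat = snis_estimate(lambda t: t, log_p_tilde, sample_q, log_q, 100_000, rng)
print(mu_hat)
```

Here the proposal is chosen by hand. The post above instead concerns drawing samples from the density proportional to p(θ)|f(θ)-µ|, which depends on the unknown µ and so cannot simply be plugged in as `sample_q`.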

In Chicago 🌆 for MCM 2025 fjhickernell.github.io/mcm2025/prog... I will give a talk on our recent and ongoing work on self-normalized importance sampling, including learning a proposal with MCMC and ratio diagnostics. branchini.fun/pubs

Wrote my first blog post! I wanted to share a powerful yet under-recognized way to develop emotional maturity as a researcher: making it a habit to read about the ✨past ✨ and learn from it to make sense of the present

Obsession with statistics or not .. better make sure evaluations make sense and we're not tuning the random seed. Don't need to know all the fanciest statistical tests, but doesn't seem like that's the issue typically (to me) 🤷‍♂️ Heard of some statistical precipices ..

.@Finnair it seems impossible to contact the customer service by chat. I've waited 2 afternoons already. I don't want to change my phone plan to have to call from abroad.

You don't _need_ a PhD (or any qualification) to do almost anything. A PhD is a rare opportunity to grow as an independent thinker in an academic environment, rather than immediately becoming a gear in a corporate agenda. It's definitely not for everyone!

You don’t need a PhD to be a great AI researcher. Even @OpenAI’s Chief Research Officer doesn’t have a PhD.

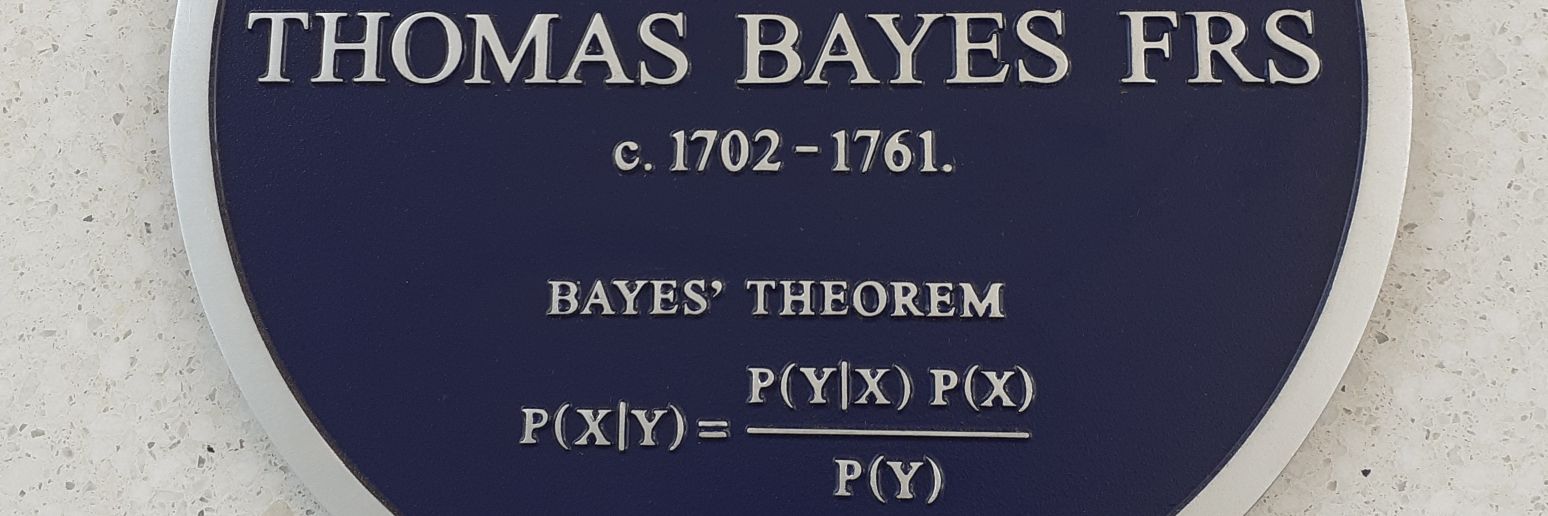

A great pleasure to crash two Bayesian statistics conferences with a dose of diffusion wisdom — last week in Singapore (bayescomp2025.sg), now in Cambridge (newton.ac.uk/event/rclw03/) — with the two authors of this very nice paper.

🚨 New paper: “Towards Adaptive Self-Normalized IS”. TL;DR: to estimate µ = E_p[f(θ)] when p(θ) has an intractable partition function, instead of running MCMC on p(θ) or learning a parametric q(θ), we try MCMC directly on p(θ)|f(θ)-µ|, the variance-minimizing proposal. arxiv.org/abs/2505.00372

That’s from 2018, a provocative title. The “problem” has only gotten worse since then. I tend to agree with his arguments. One additional advantage of writing fewer papers, which isn’t mentioned in the talk, is that it gives academic labs time to acquire new knowledge through…

Turing Award winner Michael Stonebraker suggests that the current "diarrhea of papers" is not healthy for science #slowscience – Source: youtu.be/DJFKl_5JTnA?t=…

Upon graduation, I paused to reflect on what my PhD had truly taught me. Was it just how to write papers, respond to brutal reviewer comments, and survive without much sleep? Or did it leave a deeper imprint on me — beyond the metrics and milestones? Turns out, it did. A…

In my reading experience, TMLR (largely) is NeurIPS/ICML etc without all the BS

I got recommended Terence Tao's YouTube channel created in 2010, where he uploaded his first video just yesterday! He showcases his process of formalizing a proof in Lean 4 with the help of GitHub Copilot and the "canonical" tactic in Lean.

Two years ago, I reoriented my research to try to make AI safe by design. In this @TIME op-ed, I present my team's direction, called "Scientist AI": a practical, effective and more secure alternative to the current uncontrolled agency-driven trajectory. time.com/7283507/safer-…

BayesFlow 2.0, a Python package for amortized Bayesian inference, is now powered by Keras 3, with support for JAX, PyTorch, and TF. Great tool for statistical inference fueled by recent advances in generative AI and Bayesian inference. (Links in next tweet)