Aditya Yedetore

@AdityaYedetore

Graduate Student at Boston University in the Linguistics Department. Studying language acquisition, syntax, and semantics.

I am helping organize BUCLD this year! Please consider submitting your abstracts and symposium ideas. bu.edu/bucld/

Does vision training change how language is represented and used in meaningful ways?🤔 The answer is a nuanced yes! Comparing VLM-LM minimal pairs, we find that while the taxonomic organization of the lexicon is similar, VLMs are better at _deploying_ this knowledge. [1/9]

Here's work where we try to take analogies quite seriously, plus our takes about LLM experiments serving to raise the bar for what we are willing to take as behavioral evidence for things like composition 💇‍♀️

New paper with @najoungkim and @TeaAnd_OrCoffee! We previously found that humans and LLMs generalize to novel adjective-noun inferences (e.g. "Is a homemade currency still a currency?"). Turns out analogy isn't enough to generalize, so it's likely evidence of composition! 🧵

I will be at #EMNLP2024 with some awesome tinlab people. Contact me if you would like to chat (esp. about 1. the similarities and differences between Connectionist/neural and Classical/symbolic computation, and 2. what compositionality, productivity, and systematicity are)

tinlab will be at #EMNLP2024! A few highlights:
* Presentations from @AdityaYedetore and @HayleyRossLing on neural network generalizations!
* I'm giving a keynote at @GenBench & organizing @BlackboxNLP
* Ask me about our faculty hiring & PhD/postdoc positions!
Details👇🧵

tinlab at Boston University (with a new logo! 🪄) is recruiting PhD students for F25 and/or a postdoc! Our interests include meaning, generalization, evaluation design, and the nature of computation/representation underlying language and cognition, in both humans and machines. ⬇️

I am at @COLM_conf till tomorrow night. Let me know if you would like to chat!

I’ll be at the LSA annual meeting this week, and happy to chat! Message me if you would like to.

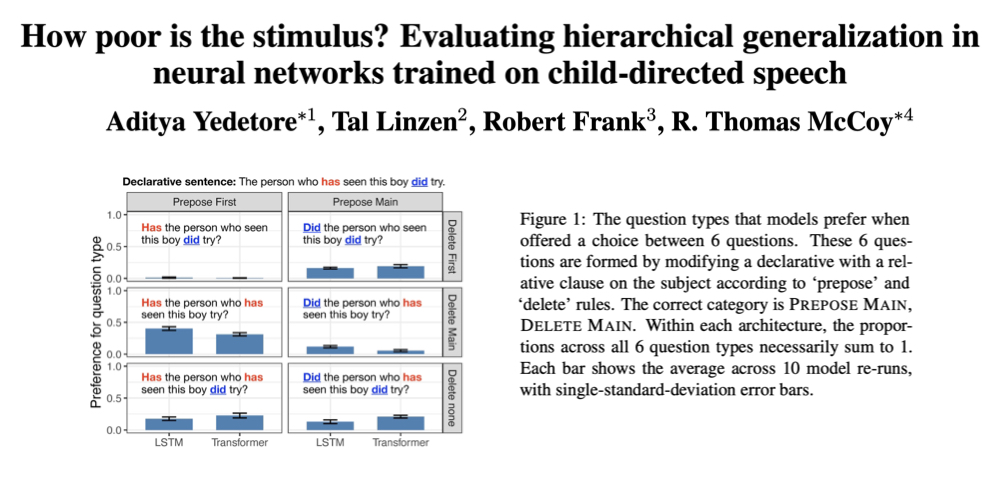

🌲Interested in language acquisition and/or neural networks? Check out our poster today at #acl2023nlp! Session 4 posters, 11:00-12:30 🌲 Elevator pitch: Train language models on child-directed speech to test "poverty of the stimulus" claims Paper: aclanthology.org/2023.acl-long.…

NEW PREPRINT Excited to release my first first-author paper! We investigate if neural network learners (LSTMs and Transformers) generalize to the hierarchical structure of language when trained on the amount of data children receive. Paper: aps.arxiv.org/abs/2301.11462